Data analysis and machine learning are finding applications in many fields of fundamental science, and theoretical physics is no exception. My group at the Institut de Physique Théorique (IPhT) explores the opposite direction. We use methods originally developed to study materials such as glasses and disordered magnets to gain a theoretical understanding of problems in data science and machine learning.

In the term *big data*, the adjective *big* does not only mean that a large hard disk is needed to store the data. It also means that each data point has a large dimensionality. Take linear regression as a simple example. Fitting a straight line through a set of data points requires only two parameters. Present-day applications, however, usually deal with data in which each point lives in a high-dimensional space, and the number of fitting parameters equals that dimension. Many of the theoretical challenges in statistics stem precisely from this high dimensionality. It turns out that some models studied in statistical physics are mathematically equivalent to models in high-dimensional statistics.
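The contrast between the two settings can be made concrete with a small numerical sketch. It assumes NumPy and synthetic Gaussian data. The helper `fit_linear_regression`, the sizes `n = 200` and `d = 1000`, and the noise level are hypothetical choices made for this illustration and are not taken from any specific study. The sketch first fits a straight line, which has two parameters. It then fits a linear model in which each data point has `d` coordinates, so there is one weight per dimension.

```python
import numpy as np

def fit_linear_regression(X, y):
    """Least-squares fit of y ≈ X @ w (hypothetical helper); one weight per column of X."""
    # lstsq returns the minimum-norm solution when there are more unknowns than equations
    w, *_ = np.linalg.lstsq(X, y, rcond=None)
    return w

rng = np.random.default_rng(0)

# Low-dimensional case: a straight line y = a*x + b has only two parameters
x = rng.uniform(-1, 1, size=50)
X_line = np.column_stack([x, np.ones_like(x)])  # columns: slope, intercept
y_line = 2.0 * x + 0.5 + 0.1 * rng.standard_normal(50)
w_line = fit_linear_regression(X_line, y_line)

# High-dimensional case: each point lives in d dimensions, so there are d weights to fit
n, d = 200, 1000  # assumed sizes: fewer samples than parameters
X = rng.standard_normal((n, d))
w_true = rng.standard_normal(d) / np.sqrt(d)
y = X @ w_true + 0.1 * rng.standard_normal(n)
w_hat = fit_linear_regression(X, y)

# Error on the data used for fitting vs. error in the recovered parameters
train_error = np.mean((X @ w_hat - y) ** 2)
relative_error = np.sum((w_hat - w_true) ** 2) / np.sum(w_true ** 2)
print(w_line, train_error, relative_error)
```

With fewer samples than parameters, the fit reproduces the training data essentially exactly. Yet `relative_error` stays large, because a perfect fit does not mean the underlying parameters were recovered. Effects of this kind, which are absent from the two-parameter line fit, are what make high-dimensional statistics theoretically demanding.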

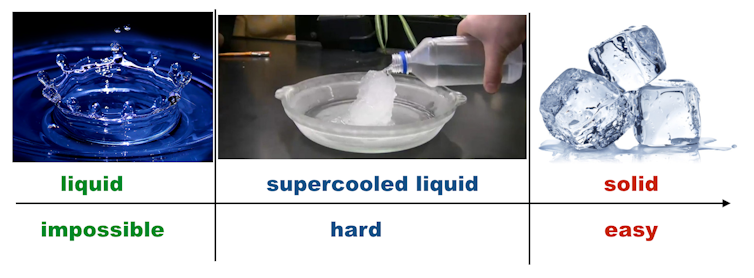

In data science, models are usually used to guide the design of algorithms. In physics, the same models are often studied with the somewhat more academic goal of understanding their behaviour per se. In particular, statistical physics is often concerned with phase transitions, that is, abrupt changes in behaviour. Interestingly, there is a deep correspondence between two things. The first is physical phases such as liquid, supercooled liquid or glass, and solid. The second is the regions of parameters in which a given data analysis task is algorithmically impossible, hard, or easy. Quantifying these phases in various data science problems is one of the main goals of my group's research.

Remarkably, this effort to understand models per se also inspires new classes of algorithms. Examples developed at IPhT include measurement protocols for compressed sensing inspired by nucleation in physics, and a new class of spectral algorithms designed for sparsely related datasets. The team currently holds an ERC Starting Grant to pursue this research direction, with a focus on the various types of neural networks that arise in deep learning.

This article was published in partnership with the CEA and its new magazine Key.