As gloomy predictions foretell the end of homework, educational institutions are hastily revising their policies and curricula to address the challenges posed by AI chatbots. It is true that the emergence of chatbots does raise ethical and philosophical questions. Yet, through their interactions with AI, people will inevitably enhance two skills that are crucial in our day and age: language awareness and critical thinking.

We are aware that this claim runs counter to widespread worries about the loss of creativity and of independent, critical thinking. However, as we will show, shifting our focus from the ‘output’ to the ‘user’ may allow for some optimism.

Sophisticated parroting

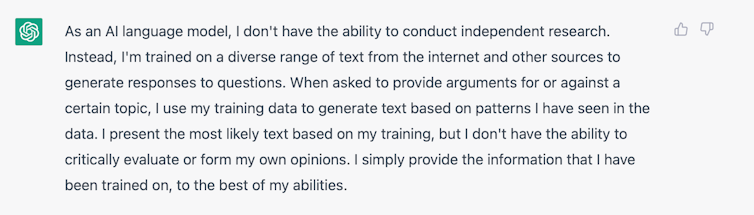

It is not surprising that ChatGPT’s success in passing an MBA exam and producing credible academic papers has sparked worry among educators about how students will learn to form an opinion and articulate it. This is indeed a scary prospect: from the smallest everyday decisions to large-scale, high-stakes societal issues, we form our opinions by gathering information, (preferably) doing some research, thinking critically as we evaluate the evidence and reasoning, and then making our own judgement. Now cue ChatGPT: it will draw on the vast dataset it has been trained on and save you the hard work of researching, thinking and evaluating. The glitch, as the bot itself admits, is that its answers are not based on independent research: “I generate text based on patterns I have seen in the data. I present the most likely text based on my training, but I don’t have the ability to critically evaluate or form my own opinions.”

Don’t be fooled by the logic of this answer: the chatbot describes what it does, but not the consequences of what it does (and, as we will see later, there is a big difference between the two). The world ChatGPT presents to us is based on argumentum ad populum: it treats as true whatever is repeated most often. Of course, repetition does not make something true. If you go down the rabbit hole of reports on AI ‘hallucinations’, you are bound to find plenty of examples. Our favourite is the chatbot dreaming up the most widely cited economics paper.

This is why we agree with those who doubt that ChatGPT will take over our content-creation, creative or fact-checking jobs any time soon. However convincing they are, AI-generated texts sit in a vacuum: a chatbot does not communicate the way humans do. Unless it is specifically told, it does not know the actual purpose of the text, the intended audience or the context in which the text will be used.

A chance to sharpen our critical skills

Users need to be savvy both in prompting and in evaluating the output. Prompting is a skill that requires precise vocabulary and an understanding of how language, style and genre work. Evaluation is the ability to judge whether the output actually does what the prompt asked for and whether it suits its purpose.

Let us give an example. Imagine your task is to respond to a corporate crisis. You turn to ChatGPT to draft a corporate apology, with the prompt: “Assume some responsibility. Use formal language. Should be short.”

And this is where the magic happens.

You have done your prompting, but now you need to check: does this text look and sound like a corporate apology? How do people normally use language to assume or shift blame? Is the text easy to read or does it hide its true meaning behind complex language? Whose voice are we hearing in the apology?

To check whether the text is right, you must know the typical features of the genre and its style. You must know about crisis management strategies, readability levels, and how we encode agency in language (as this study found).

In academic scholarship, this kind of knowledge is called language awareness. Language awareness has several levels. The first is simply noticing language(s) and their elements. The second is being able to identify and label those elements, and to manipulate them creatively.

Consider, for example, the opening lines of two corporate apologies ChatGPT created:

“We would like to deeply apologize for the actions of our company…”

“We would like to deeply apologize for the inconvenience our actions caused…”

On a cursory reading, both messages may seem equally apologetic, but they differ in what they apologize for. One apologizes for the company’s actions; the other, for the consequences of those actions. This small difference affects legal liability: in the second case, the company does not explicitly accept responsibility. After all, it is only sorry for the inconvenience.

Examples like this one can make people think about small linguistic differences and what they mean for communication. The beauty of it is that the more often we look closely at language, the more we notice what it does to a message. Once you see how a ‘fauxpology’ works, you can never unsee it.

A potential weapon against misinformation

Back to ChatGPT: as we have seen, to get the best results, users need to prompt it well and then check the generated text against the criteria set in the prompt. For this, they need to understand the nuances of language, context and intended purpose.

Why is this knowledge such a big deal? Because of a third level of awareness that we have not yet mentioned: realizing how language creates, shapes and manipulates our perceptions of reality. This knowledge is invaluable in our age of misinformation and populism, when the issues society grapples with are mostly abstract and intangible. The more people know about how language works, the more they notice how politicians and the media construct versions of the world for them through their communications.

Language awareness makes people sensitive to questionable corporate communication practices, from greenwashing to… you know, non-apologies. What is more, it may help people better understand why society does (or does not) respond to actions targeting the climate crisis. The climate crisis has been dubbed the largest communication failure in history, and almost every aspect of it, including how people act in response, depends on how we talk about it.

It is impossible to predict the extent to which AI applications like ChatGPT will disrupt the world of education and work. For now, society can both prepare for the dangers of AI and embrace its potential. In learning how to interact with these tools well, however, people are bound to become “prompt savvy” and, with that, more aware of how language works. With such language awareness comes the power to read texts with a critical eye. And here lies a glimmer of optimism for a sustainable future: critical reading leaves less room for manipulation and misinformation.