Humans are currently the most intelligent beings on the planet – the result of a long history of evolutionary pressure and adaptation. But could we some day design and build machines that surpass the human intellect?

This is the concept of superintelligence, a growing area of research that aims to improve understanding of what such machines might be like, how they might come to exist, and what they would mean for humanity’s future.

Oxford philosopher Nick Bostrom’s recent book Superintelligence: Paths, Dangers, Strategies discusses a variety of technological paths that could reach superintelligent artificial intelligence (AI), from mathematical approaches to the digital emulation of human brain tissue.

And although it sounds like science fiction, a group of experts, including Stephen Hawking, wrote an article on the topic noting that “There is no physical law precluding particles from being organised in ways that perform even more advanced computations than the arrangements of particles in human brains.”

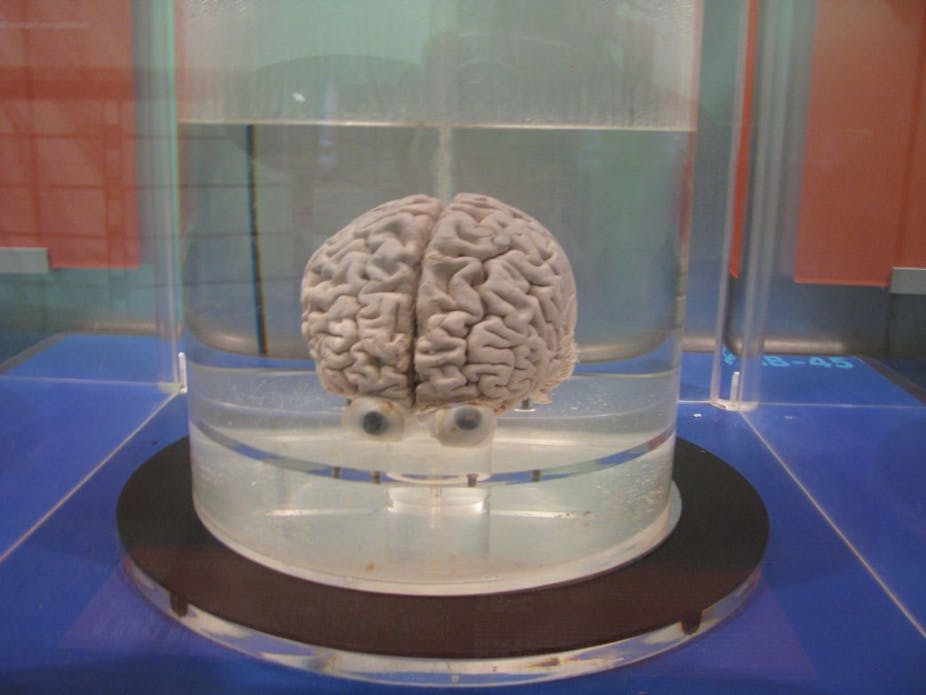

Brain as computer

The idea that the brain performs “computation” is widespread in cognitive science and AI, since the brain deals in information, converting a pattern of input nerve signals into output nerve signals.

Another well-accepted theory is that physics is Turing-computable: that whatever goes on in a particular volume of space, including the space occupied by human brains, could be simulated by a Turing machine, a kind of idealised information processor. Physical computers perform these same information-processing tasks, though with finite memory and time they fall short of Turing’s hypothetical device.

These two ideas come together to give us the conclusion that intelligence itself is the result of physical computation. And, as Hawking and colleagues go on to argue, there is no reason to believe that the brain is the most intelligent possible computer.

In fact, the brain is limited by many factors, from its physical composition to its evolutionary past. Brains were not selected exclusively to be smart, but to generally maximise human reproductive fitness. Brains are not only tuned to the tasks of the hunter-gatherer, but must also fit through the human birth canal; supercomputing clusters and data centres have no such constraints.

Synthetic hardware has a number of advantages over the human brain both in speed and scale, but the software is what creates the intelligence. How could we possibly get smarter-than-human software?

Evolving intelligence 2.0

Evolution has produced intelligent entities – dogs, dolphins, humans – so it seems theoretically possible that humans could recreate the process. Methods known as “genetic” algorithms enable computer scientists and engineers to utilise the power of natural selection to discover solutions or designs with incredible efficiency.

Evolutionary algorithms keep plugging away, exploring the options, automatically assessing what works, discarding what doesn’t, and thus evolving towards the researchers’ desired outcomes. In Superintelligence, for example, Bostrom recounts a genetic algorithm’s surprising solution to a hardware design problem:

“[The experimenters] discovered that the algorithm had, MacGyver-like, reconfigured [the] sensor-less motherboard into a makeshift radio receiver, using the printed circuit board tracks as an aerial to pick up signals generated by personal computers that happened to be situated nearby in the laboratory.”
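The explore, assess and discard loop described above can be sketched in a few lines of Python. This is a toy illustration only, not the hardware experiment Bostrom recounts: the problem (evolving a string of bits until every bit is 1), the function names and every parameter value are assumptions chosen for brevity.

```python
import random

def fitness(genome):
    """Score a candidate: here, simply the number of 1-bits (a toy objective)."""
    return sum(genome)

def mutate(genome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return [bit ^ 1 if rng.random() < rate else bit for bit in genome]

def crossover(a, b, rng):
    """Single-point crossover: a prefix of one parent joined to a suffix of the other."""
    point = rng.randrange(1, len(a))
    return a[:point] + b[point:]

def evolve(genome_length=32, population_size=40, generations=200,
           mutation_rate=0.02, seed=0):
    """Run a simple genetic algorithm and return the fittest genome found."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(genome_length)]
                  for _ in range(population_size)]
    for _ in range(generations):
        # Assess what works: rank candidates by fitness.
        population.sort(key=fitness, reverse=True)
        if fitness(population[0]) == genome_length:
            break  # a perfect candidate has been found
        # Discard what doesn't: keep only the top half as parents.
        survivors = population[: population_size // 2]
        # Explore the options: refill with mutated offspring of random parents.
        children = [
            mutate(crossover(rng.choice(survivors), rng.choice(survivors), rng),
                   mutation_rate, rng)
            for _ in range(population_size - len(survivors))
        ]
        population = survivors + children
    return max(population, key=fitness)

best = evolve()
print(fitness(best), "of", len(best), "bits set")
```

Nothing in the loop knows what a good solution looks like; it only knows how to score one. That is why such methods can land on designs nobody anticipated, as in the radio receiver example.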

Of course, it is substantially more difficult to evolve a brain than a radio receiver. Bostrom takes the case of simulating the evolution of the central nervous system. A back-of-the-napkin estimate supposes that there are approximately 10²⁵ (1 followed by 25 zeroes) neurons on our planet today and assumes that this population has been evolving for a billion years.

Current models of neurons that mimic the computation in the brain require up to about 10⁶ calculations per second, per neuron, or about 10¹³ per year.

If we were to use these numbers to recreate evolution in (for example) one year of computation, it would require a computer that could perform about 10³⁹ calculations per second – far beyond our present-day supercomputers.
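The arithmetic behind that figure is easy to check. The sketch below simply multiplies the order-of-magnitude estimates quoted above; the variable names are ours, and the inputs are rough estimates rather than measurements.

```python
# Figures as quoted in the text (order-of-magnitude estimates only).
NEURONS_ON_EARTH = 1e25            # neurons alive on the planet today
YEARS_OF_EVOLUTION = 1e9           # a billion years of evolving nervous systems
CALCS_PER_NEURON_PER_YEAR = 1e13   # the text's rounded figure for 1e6 per second
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # about 3.16e7

# Total work: every neuron, every year, for a billion years.
total_calculations = (NEURONS_ON_EARTH
                      * CALCS_PER_NEURON_PER_YEAR
                      * YEARS_OF_EVOLUTION)

# Squeeze all of that work into a single year of computing.
required_rate = total_calculations / SECONDS_PER_YEAR

print(f"total calculations:   {total_calculations:.1e}")
print(f"required rate (1/s):  {required_rate:.1e}")
```

With these inputs the total comes to about 10⁴⁷ calculations and the required rate to a few times 10³⁹ per second. Since 10⁶ calculations per second is closer to 3 × 10¹³ per year than to 10¹³, the unrounded answer would sit nearer 10⁴⁰, which only strengthens the point.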

It can be hard to put such large numbers into context, but the key point is that such raw computing power isn’t likely to be available to us any time soon. Bostrom notes that “even a continued century of Moore’s law would be insufficient to close this gap.”
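A rough projection shows why. The sketch below takes the figure of about 10³⁹ calculations per second quoted above and compares it with a century of steady doubling. Two inputs are assumptions that do not come from Bostrom: a present-day baseline of 10¹⁷ calculations per second (a hypothetical round number for a large supercomputer) and doubling periods of two years and eighteen months, two common readings of Moore’s law.

```python
import math

def projected_rate(baseline_rate, years, doubling_period_years):
    """Rate after `years` of steady doubling every `doubling_period_years`."""
    doublings = years / doubling_period_years
    return baseline_rate * 2 ** doublings

TARGET_RATE = 1e39     # calculations per second, from the estimate in the text
BASELINE_RATE = 1e17   # ASSUMPTION: hypothetical present-day supercomputer

for period in (2.0, 1.5):  # ASSUMPTION: doubling period in years
    rate = projected_rate(BASELINE_RATE, 100, period)
    shortfall = math.log10(TARGET_RATE / rate)
    print(f"doubling every {period} years: {rate:.1e} per second after a century, "
          f"short by about {shortfall:.0f} orders of magnitude")
```

Under either assumption the projected machine still falls short of the target, by roughly seven orders of magnitude with two-year doubling and roughly two with eighteen-month doubling.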

But aside from brute force, there are other ways we could close the gap. Natural evolution is wasteful in this context, since it doesn’t select only for intelligence. It’s possible that we could find many shortcuts, although it’s unclear exactly how much faster a human-directed process could arrive at smarter-than-human digital brains.

The Star Trek vision of the future of intelligence – robots that top out at the level of mathematically talented humans and go no further – is itself a failure of the human imagination.

In any case, the evolutionary approach is only one possible strategy. Branches of machine learning, cognitive science, and neuroscience have used our limited understanding of the human brain along with algorithms to break CAPTCHAs, translate books, and manage railway systems. Managing more abstract and strategic plans (including plans for developing AI) could be where we’re headed, and there’s little reason to believe that AI will come to an abrupt stop at the human level.