This essay is the second of a four-part series commemorating the anniversary of the first ever message sent across the ARPANET, the progenitor of the Internet, on October 29, 1969.

In 1962, ARPA’s Command and Control Research Division became the Information Processing Techniques Office (IPTO), and the IPTO, first under J. C. R. Licklider and then under Ivan Sutherland, became a key ally in the development of computer science.

The main function of the IPTO was to select, fund and coordinate US-based research projects that focused on advanced computer and network technologies. The IPTO had an estimated annual budget of $19 million, with individual grants ranging from $500,000 to $3 million. Following the path traced by Licklider, it was at the IPTO and under the leadership of a young prodigy named Larry Roberts that the Internet began to take shape.

Until the end of the 1960s, running tasks on computers remotely meant transferring data along the telephone line. This system was fundamentally flawed: the analogue circuits of the telephone network could not guarantee reliability, the connection remained open once activated, and transmission was too slow to be considered efficient.

During these early years, it was not uncommon for whole sets of information and input to be lost on the journey from a terminal to the remotely located mainframe computer, so that the whole procedure (not a simple one) had to be restarted and the information re-sent. The procedure was burdensome in every respect: it was highly ineffective, costly (the line remained in use for long periods while computers waited for inputs) and time-consuming.

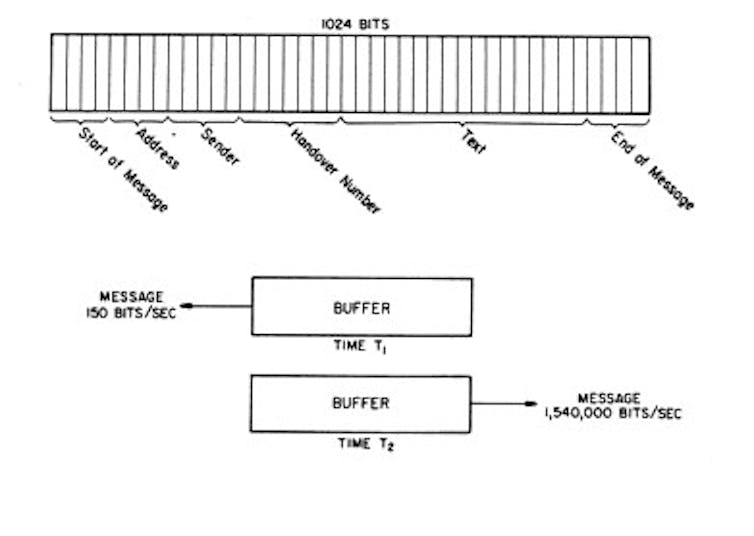

The solution to the problem was called packet-switching, a simple and efficient method to rapidly store-and-forward standard units of data (1024 bits) across a computer network. Each packet is handled as if it were a hot potato, rapidly passed from one point of the network to the next, until it reaches its intended recipient.

Like a letter sent through the postal system, each packet contains information about its sender and its destination; it also carries a sequence number that allows the end receiver to reassemble the message in its original form.
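The idea of addressed, numbered packets can be illustrated with a minimal Python sketch. It is not a reconstruction of any historical protocol: the `Packet` fields, the function names and the host names `host-a` and `host-b` are hypothetical, and the sketch assumes for simplicity that the whole 1024-bit unit is payload.

```python
from dataclasses import dataclass
import random

PACKET_BITS = 1024                # unit size mentioned in the text
PAYLOAD_BYTES = PACKET_BITS // 8  # assumption: the whole unit carries payload


@dataclass(frozen=True)
class Packet:
    sender: str        # where the packet comes from
    destination: str   # where it should end up
    sequence: int      # position of this piece in the original message
    payload: bytes     # the piece of the message itself


def packetize(message: bytes, sender: str, destination: str) -> list[Packet]:
    """Cut a message into fixed-size, addressed and numbered packets."""
    return [
        Packet(sender, destination, seq, message[offset:offset + PAYLOAD_BYTES])
        for seq, offset in enumerate(range(0, len(message), PAYLOAD_BYTES))
    ]


def reassemble(packets: list[Packet]) -> bytes:
    """Rebuild the message from packets that may arrive in any order."""
    ordered = sorted(packets, key=lambda p: p.sequence)
    return b"".join(p.payload for p in ordered)


message = b"LOGIN " * 100                      # 600 bytes of sample data
packets = packetize(message, "host-a", "host-b")
random.shuffle(packets)                        # simulate out-of-order arrival
assert reassemble(packets) == message
```

Because every packet carries its own sequence number, the receiver does not depend on the order of arrival, which is what allows each packet to travel independently through the network.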

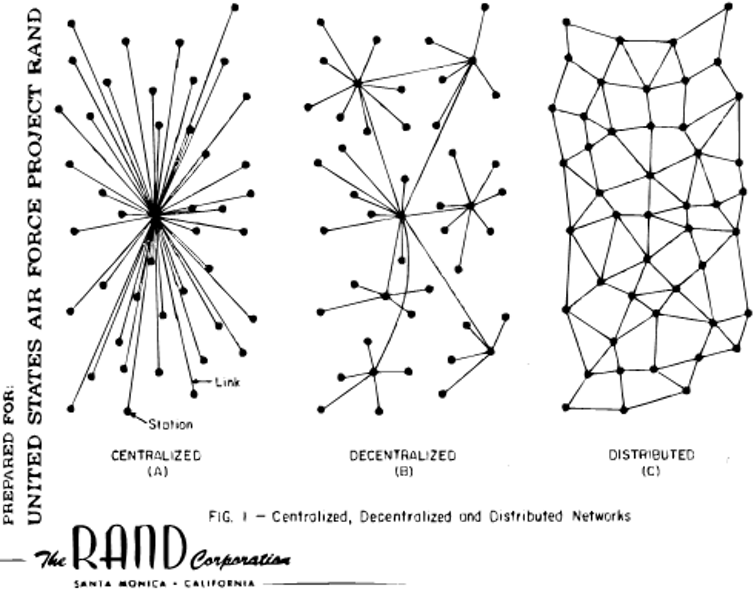

The theory behind the system was elaborated independently yet simultaneously by three researchers: Leonard Kleinrock, then at the Massachusetts Institute of Technology (MIT), Donald Davies at the National Physical Laboratory (NPL) in the UK, and Paul Baran at RAND Corporation in California. Though Davies’ own word “packet” was eventually accepted as the most appropriate term to refer to the new theory, it was Baran’s work on distributed networks that was later adopted as the blueprint of the ARPANET.

Distributed Communication Networks

RAND (Research and Development) Corporation, founded in 1946 and based in Santa Monica, California, is to this day a non-profit institution that provides research and analysis in a wide range of fields with the aim of helping the development of public policies and improving decision-making processes. During the Cold War era, RAND researchers produced possible war scenarios (like the hypothetical aftermath of a nuclear attack by the Russians on American soil) for the US government. Among other things, RAND’s researchers attempted to predict the number of casualties, the degree of reliability of the communication system and the possible danger of a black-out in the chain of command if a nuclear conflict suddenly broke out.

Paul Baran was one of the key researchers at RAND. In 1964 he published a paper titled On Distributed Communications in which he outlined a communication system resilient enough to survive a nuclear attack. Though it was impossible to build a system of communication that could guarantee the endurance of all single points, Baran posited that it was reasonable to imagine a system which would force the enemy to destroy “n of n stations”. So “if n is made sufficiently large”, Baran wrote, “it can be shown that highly survivable system structures can be built even in the thermonuclear era”.

Baran was the first to postulate that communication networks can only be built around two core structures: “centralised (or star) and distributed (or grid or mesh)”. From this he derived three possible types of networks: A) centralised, B) decentralised and C) distributed. Of the three types, the distributed was found to be far more reliable in the event of a military strike.

A and B represented types of systems where the “destruction of a single central node destroys communication between the end stations”. By contrast, the distributed network (C) was different. In theory, one could remove or destroy one of its parts without causing great harm to the economy or function of the whole network. When a part of a distributed network is no longer functioning, the task performed by that part of the network can easily be moved to a different section.
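The difference between a centralised and a distributed layout can be made concrete with a small Python sketch. It is only an illustration under stated assumptions: the function names `star`, `grid` and `survives` are hypothetical, and a simple rectangular grid stands in for Baran's mesh (his actual designs and redundancy levels are not reproduced here).

```python
from collections import deque


def star(n):
    """Centralised network: node 0 is the hub, all other nodes link only to it."""
    links = {i: set() for i in range(n)}
    for i in range(1, n):
        links[0].add(i)
        links[i].add(0)
    return links


def grid(rows, cols):
    """Distributed network: each node links to its horizontal and vertical neighbours."""
    links = {(r, c): set() for r in range(rows) for c in range(cols)}
    for r, c in links:
        for nr, nc in ((r + 1, c), (r, c + 1)):
            if (nr, nc) in links:
                links[(r, c)].add((nr, nc))
                links[(nr, nc)].add((r, c))
    return links


def survives(links, destroyed):
    """Return True if every remaining node can still reach every other one."""
    alive = set(links) - set(destroyed)
    if not alive:
        return True
    start = next(iter(alive))
    seen = {start}
    queue = deque([start])
    while queue:  # breadth-first search over the surviving nodes
        node = queue.popleft()
        for neighbour in links[node]:
            if neighbour in alive and neighbour not in seen:
                seen.add(neighbour)
                queue.append(neighbour)
    return seen == alive


# Destroying the hub of a star cuts off every end station.
assert not survives(star(9), destroyed=[0])
# Destroying the centre of a 3x3 grid leaves the other eight nodes connected.
assert survives(grid(3, 3), destroyed=[(1, 1)])
```

In this toy grid a corner node has only two neighbours, so destroying both of them still isolates it; the more links each node has, the more stations an attacker must destroy to split the network.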

Unfortunately, Baran’s ideal network was ahead of its time. Redundancy, the number of nodes attached to each node, is a key element in increasing the strength of any distributed network. Baran’s required level of redundancy (at least three or four nodes attached to each node) can only be properly sustained in a fully developed digital environment, which in the 1960s was not yet available. Baran was surrounded by analogue technology; we, by contrast, now live in a rapidly expanding digital age.

Thanks, but not interested

Nevertheless, Baran’s speedy store-and-forward distributed network was highly efficient and required very little storage at node level; the entire system had an estimated cost of $60 million to support 400 switching nodes and in turn service 100,000 users. And though RAND believed in the project, it failed to find the partners with whom to build it.

First the Air Force and then AT&T turned down RAND’s proposal in 1965. AT&T argued that such a network was neither feasible nor a better option than its own existing telephone network. But according to Baran, the telephone company simply believed that ‘it can’t possibly work. And, if it did, damned if we are going to set up any competitor to ourselves.’

If AT&T had accepted the proposal, the Internet could have been a commercial enterprise from the start, and may well have ended up completely different to the one that we use today.

The Department of Defence (DoD) also got involved, but failed to seize the moment, and with it the chance of turning Baran’s project, from its early stages, into a military network. After examining RAND’s proposal in 1965, the DoD, caught in a political power struggle with the Air Force, decided to put the project under the supervision of the Defence Communication Agency (DCA). This was a strategic mistake. The Agency was the least desirable manager for the project. Not only did it lack any technical competence in digital technology, it also had a bad reputation as the parking lot for employees who had been rejected by the other government agencies. As Baran put it:

If you were to talk about digital operation [with someone from the DCA] they would probably think it had something to do with using your fingers to press buttons.

There was, however, some truth in considering Baran’s network model impracticable. It was at least a decade ahead of its time. Some of its components didn’t even exist yet. Baran had, for example, imagined a number of mini-computers to be used as routers, but this technology simply wasn’t available in 1965. Hence, Baran’s vision became economical only when, a few years later, the mini-computer was invented.

ARPANET begins to take shape

It was only in 1969, at UCLA (not that far from Santa Monica, where Baran worked), that the first cornerstone of the Internet was finally laid, and the ARPANET, the first computer network, was built.

Paradoxically, what had begun a decade earlier as a military answer to a Cold War threat (the Sputnik) turned into a completely different kind of network.

In the initial plan for the ARPANET, presented at the ACM Symposium in Gatlinburg in October 1967, Larry Roberts, the project leader, listed a series of reasons to build the network. None of them was concerned with military issues. Instead, they looked towards sharing data load between computers, providing an electronic mail service, sharing data and programmes and, finally, providing a service to log in and use computers remotely.

In the original ARPANET Program Plan, published a year later (3 June 1968), Roberts wrote:

The objective of this program is twofold: (1) to develop techniques and obtain experience on interconnecting computers in such a way that a very broad class of interactions are possible, and (2) to improve and increase computer research productivity through resource sharing.

During the first half of the Sixties, Licklider had pushed for IPTO grant recipients to use their funds to buy time-sharing computers. The move aimed to help optimise the use of resources and reduce the overall costs of ARPA’s project. This was, however, not enough. To be truly effective, those computers had to be linked together in a network; and that, in turn, implied the computers had to be able to communicate with each other.

In 1965 this communication problem became startlingly clear to Robert Taylor, a former NASA System Engineer, who, initially hired as Ivan Sutherland’s deputy, became IPTO’s director when Sutherland left in 1966. Taylor quickly realised that the fast growing community of research centres sponsored by his office was very complex but poorly organised.

In stark contrast with the rising sense of community shared by individual researchers throughout the country (a community fostered mainly by participation in academic conferences), each centre was barely interacting with the others. In fact, resource sharing was limited to one mainframe computer at a time. This lack of interaction was partially due to the lack of both a streamlined procedure and a network infrastructure to access remotely located resources.

At the time, if researchers wanted to use the resources (applications and data) stored in a computer at their UCLA campus, they had to log in through a terminal. This procedure became more cumbersome when the researchers needed to access another resource, for instance a graphic application, which was not loaded on their mainframe computer but was only available on another computer in another location, say at Stanford. In that case, the researchers were required to log into the computer at Stanford from a different terminal with a different password and a different user name, using a different programming language. There was no possible mode of communication between the different mainframe computers. In essence, these computers were like aliens speaking different idioms to each other.

Taylor saw this issue as a waste of funds and resources, and his direct experience of the problem was a daily source of frustration. Due to the incompatibility of hardware and software, in order to use the three terminals available in his office at the Pentagon, Taylor was required to remember three different login procedures and use three different programming languages and operating systems every morning.

For the IPTO, and in turn for ARPA, the lack of communication and compatibility between the hardware and software of their many funded research centres was causing a widening black hole in the annual budget: as each contractor had different computing needs (i.e. it needed different resources in terms of hardware and software), the IPTO had to handle several (sometimes similar) requests each year to meet those needs.

Like Licklider, Taylor understood that, in most cases, the costs could be optimised and greatly reduced by creating an easily accessible network of resource-sharing mainframe computers.

In such a network, each computer would have to be different, with different specialisations, applications and hardware. The next step, then, was to create that network.

In 1966, after a brief and quite informal meeting with Charles Herzfeld, then Director of ARPA, Taylor was given an initial budget of $1 million to start building an experimental network called ARPANET. The network would link some of the IPTO-funded computing sites. The decision took no more than a few minutes. Taylor explained:

I had no proposals for the ARPANET. I just decided that we were going to build a network that would connect these interactive communities into a larger community in such a way that a user of one community could connect to a distant community as though that user were on his local system. First I went to Herzfeld and said, this is what I want to do, and why. That was literally a 15-minute conversation.

Then, Herzfeld asked: “How much money do you need to get it off the ground?” And Taylor, without thinking too much of it, said “a million dollars or so, just to get it organised”. Herzfeld’s answer was instantaneous: “You’ve got it”.