Visiting British expert David Sweeney could have star appeal for academics frustrated by the bureaucratic inflexibility of Australia’s research auditing system and metrics-based assessments blind to the social impact of their work.

Dr Sweeney, director of research, innovation and skills at the Higher Education Funding Council for England (HEFCE), is speaking at a symposium in Canberra tomorrow about a new funding system which allows universities to present case studies of their finest research to panels of academics and non-academics.

The panels assess the impact of the research both within and beyond academia and compare this impact across different universities.

The new approach was inspired by the impact-assessing elements of a Howard-government initiative, the Research Quality Framework (RQF), which the Labor Government scrapped in 2007 in favour of the metrics-heavy Excellence in Research for Australia (ERA) system.

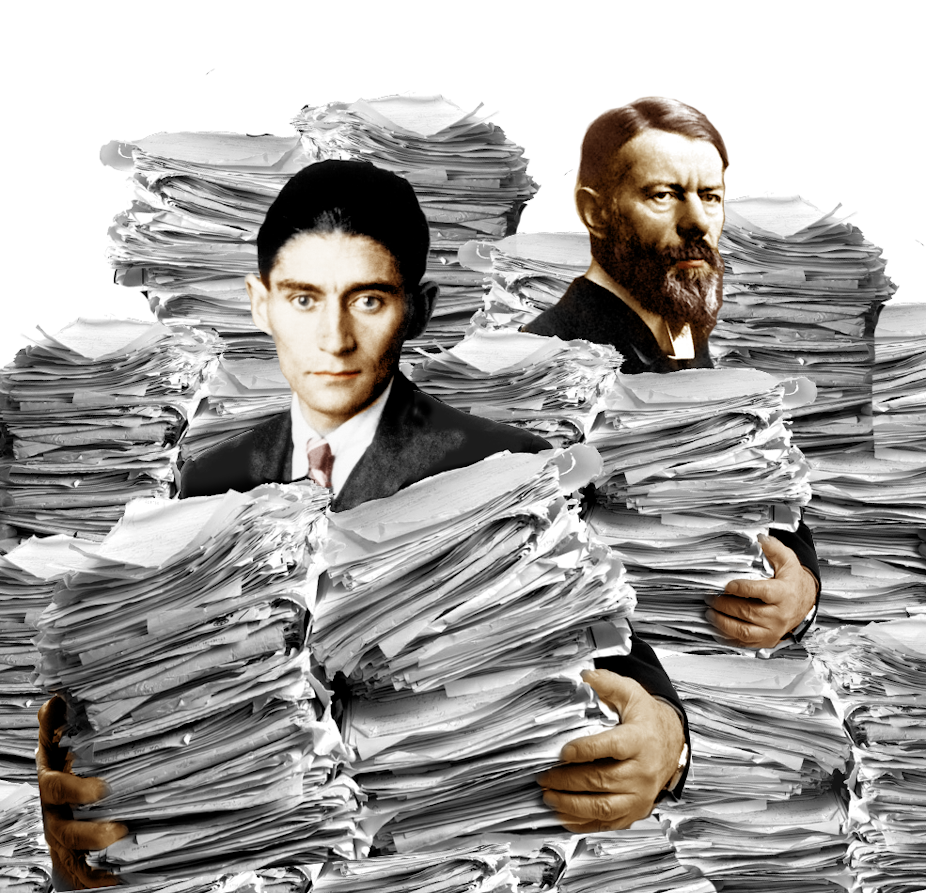

David Sweeney, Director of Research, Innovation and Skills at the Higher Education Funding Council for England (HEFCE)

How do you measure impact?

I don’t think we’re interested in measuring impact. We’re interested in assessing comparative impact from one university to another.

Absolute measures of impact are possible in narrow discipline groups [such as] health and economics, but our interest is in something that goes across different disciplines, and it’s in comparing one university against another rather than an absolute measure of impact.

I think that, from our experience of running a pilot, is feasible.

We’ve managed to do it using basic criteria of the reach and significance of the impact, and a case study approach which was indeed pioneered by the ill-fated RQF in Australia. We’ve built on that, trialled it, and we’re now moving to full-scale deployment across 36 discipline areas which cover the whole set of disciplines in the country.

Where did the idea of comparative assessments come from?

You in Australia with the RQF were looking at it. We got on the same track because we’ve had a comparative assessment system for traditional published outputs for some time. We just amended that system, with lots of help from the RQF, to assess impact as well as the quality of published outputs.

Has there been much resistance in the UK?

There’s a variety of views. Some resistance disappeared - or perhaps ‘went away’ might be a bit more tactful - when we made it clear that we weren’t assessing the work of every academic. We were looking at the collective work of academic departments. And it defused some of the criticisms when we made it clear that we weren’t predicting the impact that might come from research, but looking at the evidence of impact that has been achieved - and that impact may be of research that goes back 20 years or even slightly more.

Our argument was that in any sizable academic department over a fairly lengthy period you ought to be able to do case studies on the contribution of your research to society.

We also defused the criticism by making clear that we weren’t going for a metrics-based approach. In some disciplines, some metrics will inform a judgement made by both academics and the users of research, but in all disciplines it will be that kind of judgement; for example, in humanities disciplines the descriptions of impact will be developed by people working in the subject. We have broad criteria: for example, we tried having disciplinary experts assess the contribution English literature makes to society and it worked fine.

We make no comparison at all of one discipline against another. We’re only interested in comparing universities against each other in the same discipline, and again, that defused a fair bit of the argument.

Let’s be clear, there are some very eminent academics, people we respect, who feel that the whole subject [assessing impact] is too difficult. What we would say is that we’ve teased it out by trying it, and we think progress has been made. Certainly rather a lot of people agree with us, because they’ve agreed to serve on panels, and in the formal consultation processes we’ve run around all of our proposals they’ve endorsed what we’re doing.

Who asked you to come to Australia to speak about this?

The ATN [Australian Technology Network of Universities] and the Group of Eight have a symposium tomorrow in Canberra. I’ve had longstanding contacts with the ATN, less so with the Go8, although I have visited them, and they asked me out to talk about our experiences in the UK, knowing full well that the system in Australia is really quite different.

There are many distinguishing features that were built on what was planned in Australia, but how it may or may not be taken forward in Australia depends on a consideration of how the contexts differ. For example, we have a selective exercise where we only look at the very best research, whereas I think the Australian system has the character of an audit that looks at all of the research done in Australian universities, because you’re very heavily metrics-driven in your approach. We only use metrics to inform peer judgements.

How does the Australian system look from the outside? Does the metrics-driven approach appear to skew funding, to skew research and assessment, towards disciplines that fit well with metrics?

I don’t know. I don’t know enough about how the system is implemented in Australia. I think, in terms of its design, it’s tricky to assess some disciplines using a metrics approach, and I think the Australian Research Council [ARC] has accepted that and has tried to address it by using elements of judgement on the panels.

But we need to be clear that because ours is a selective exercise, with only four published outputs submitted per academic, we can expect our panels to actually read all the material and form their own judgement - not make a judgement based on the place where the output was disseminated.

So our approach takes no account of journal impact factors, or indeed of the journal [itself], although one might reasonably assume that panels will view most material that appears in, for example, Nature highly, because getting into Nature is itself a peer review process.

Again, in the consultation exercises we’ve done in the UK, there’s been very strong support for us sticking with expert peer review rather than going for a more metrics-driven [system].

We ran a metrics pilot, actually, about three years ago in the UK and we were uncomfortable with the results, in our context where we were looking selectively at the best work.

This year the Australian Government scrapped the ranking system for journals. Do you see that as a good thing that allows articles to be assessed on their own merits instead of concentrating on the journals’ rank?

It is good to look at the quality of the piece [the journal article]. It’s quite difficult to pick out individual bits of the system and comment on them because then the whole system has to be looked at together, but we’re very clear that we don’t think that journal rankings are a good idea in our context.

There has been some use of journal rankings in the past - very limited use - but we didn’t use it last time [in pilots] and we won’t use it this time.

But I understand that when you’re trying to do an enormous exercise of auditing all of the work, as Australia’s doing, you cannot possibly use our approach.

There’s no point saying there are deficiencies in one element of it - you’re starting from a different place in Australia. And I think our experience in the UK shows the willingness of those responsible for the exercise to consult the community to identify better ways forward.

Does your system reward public intellectuals, people working in the public sphere, writing for the press, etc, as opposed to academics aiming everything at journals?

Yes, it could and it probably will. Yet I do think that our system relies not just on dissemination as the criterion, but on the ideas being disseminated: first of all, on those ideas having been critically considered and taken up in the public intellectual sphere, but also on their being underpinned by strong research.

So I think that academics who are talking heads will not fit into the system, whereas those who have done deep thinking, have published the fruits of their ideas and are now taking them forward in the public intellectual sense may well develop a case study that would describe strong impact. That’s perfectly possible.

In the UK, are smaller, regional, or less prestigious universities scared of being compared to wealthier, more established and prestigious universities?

Not at all - quite the opposite. Universities we would say are less research intensive - where the bulk of their money comes from teaching - will have research strength, albeit possibly only in selected areas, and they positively want to take part in this assessment exercise.

Indeed, we consulted over a proposal in 2003, I think it was, to have a two-stage process in which those [less heavyweight] universities didn’t get into the main exercise, and that was emphatically rejected. The last time we did the exercise, in 2008, many smaller universities had pockets of excellence in one subject, possibly with small numbers of academics, and, because the exercise entirely drives our research funding system, they all got rewarded.

Our motto is we recognise and reward excellence wherever it is found.

We’re absolutely determined about that. Now, it’s small amounts of money [that smaller universities receive], because the volume is small, but nevertheless we think every university ought to have the chance to do research should it choose to fund it in the first place, and if that research demonstrates excellence, then it should be recognised and rewarded.

How are the impact assessments tied to funding?

It totally drives core research funding. We’ve two streams of funding. We’ve project-based funding, which this doesn’t apply to, but roughly half of the money is delivered as a block grant to the universities for putting into new areas, hiring young staff or covering research infrastructure - whatever universities want to do - and that money is wholly and completely driven by this exercise.

This is due to be implemented in 2014. Where is it at now?

We’ve done a pilot, and we’ve just consulted over the fine details of the criteria: the discipline-based panels have published draft criteria, and although we haven’t yet finished analysing that consultation, it’s safe to say that the draft criteria were broadly endorsed. What we’re doing now is identifying areas where small improvements can be made based on the consultation feedback.

Should Australia move to an evaluation and funding system like Dr Sweeney’s, where universities put forward their best research to be assessed and compared by panels of academics and appropriate non-academics? Did the Labor Government make a mistake by scrapping the RQF in favour of a more heavily metrics-based audit-style system?

Comments welcome below.