The notion of “signal processing” might seem impenetrably complex, even to scientists. However, most of them have already been doing it for a long time, albeit without realising it. Acquiring, shaping and transforming data, cleaning it for the sake of improved analysis and extraction of useful information – all of this is what experimental science is about. And by adding ideas of modelling and algorithms, you arrive at an ensemble of methods that constitutes a scientific discipline in its own right.

Signals everywhere

What is a signal? It is essentially the physical carrier of information: audio recordings, images, videos, data collected by sensors of various kinds… It includes almost all forms of data that are or can be digitised, up to and including text.

Our world is full of signals that can be processed, coming from nature as well as from past and current technology. Mathematics is a necessary step in formalising the processing and evaluating its performance. Computer science makes it possible to implement algorithms efficiently. Interacting with each of these fields, yet irreducible to any of them, signal processing is by nature an interface discipline. Developing its own methodologies in constant dialogue with applications, from acquisition to interpretation, it is both singular and central in the landscape of information sciences.

A bit of history

Tracing back to the origins of signal processing, one finds Joseph Fourier (1768-1830), one of the field’s pioneers.

To establish the equations governing heat propagation, Fourier developed a mathematical method – now called the “Fourier transform” – that replaces the description of a signal in the time or space domain with another one in the frequency domain. Fourier clearly stated the ambition of his research in his landmark 1822 monograph, “The Analytic Theory of Heat”:

“This difficult research required a special analysis, based on new theorems… The proposed method ends up with nothing vague and undetermined in its solutions; it drives them to their ultimate numerical applications, a condition which is necessary for any research, and without which we would only obtain useless transformations.”

In a few words, everything is said about the necessity of considering physical models, mathematical methods and efficient algorithms together.

Fourier was concerned with numerical applications, but in the 19th century such possibilities were limited. The transformation he imagined began to have a real impact in 1965, when James Cooley and John Tukey proposed an algorithm called the “fast Fourier transform” (FFT). This work completed Fourier’s vision, and so 1965 could be considered the actual birth of modern (digital) signal processing.
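To make the change of domain concrete, here is a minimal sketch in Python (standard library only). It compares a direct evaluation of the discrete Fourier transform with a textbook radix-2 recursive FFT, in the spirit of the Cooley-Tukey idea of splitting the computation into even-indexed and odd-indexed samples. The function names, the test signal and its two frequencies are illustrative assumptions, not taken from the original 1965 paper.

```python
import cmath
import math


def dft(x):
    """Direct discrete Fourier transform: about N^2 operations."""
    n = len(x)
    return [
        sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def fft(x):
    """Recursive radix-2 FFT: about N log N operations.

    Illustrative textbook variant; len(x) must be a power of two.
    """
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    if n % 2:
        raise ValueError("length must be a power of two")
    even = fft(x[0::2])  # transform of the even-indexed samples
    odd = fft(x[1::2])   # transform of the odd-indexed samples
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out


# Hypothetical test signal: two sinusoids with 5 and 12 cycles over N samples.
N = 64
signal = [
    math.sin(2 * math.pi * 5 * t / N) + 0.5 * math.sin(2 * math.pi * 12 * t / N)
    for t in range(N)
]

spectrum = fft(signal)
magnitudes = [abs(c) for c in spectrum[: N // 2]]

# The two largest magnitudes sit at the frequencies present in the signal.
peaks = sorted(sorted(range(N // 2), key=lambda k: magnitudes[k], reverse=True)[:2])
print(peaks)
```

In the time domain the signal looks like an irregular oscillation; in the frequency domain it reduces to two peaks. Both functions return the same values up to rounding, but the recursive version needs far fewer operations as the signal grows, which is what made large-scale numerical use practical.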

Even before that, around World War II, signal processing had begun to develop, mostly for military purposes related to sonar and radar. This led to new approaches aimed at solidifying the theoretical bases of then-vague notions such as message, signal, noise, transmission and control. Within a few years emerged the communication theory of Dennis Gabor, the cybernetics of Norbert Wiener and, of course, the information theory of Claude E. Shannon.

In parallel, the French physicist and mathematician André Blanc-Lapierre developed a “theory of random functions” that allowed the modelling and analysis of the “background noise” observed in underwater acoustics. This collaboration between academia and the Navy gave birth to the GRETSI association, which, in 1967, organised the first-ever congress on the emerging field of signal processing. This event, held in France, launched a series of biennial symposia. The most recent, held in September 2017, was an occasion to celebrate the 50th anniversary of a successful meeting that reflects the vitality of its community.

Countless applications

Today, signal processing serves a variety of applications that go far beyond its origins, progressively reaching more and more domains of science and technology. In recognition of this importance, the IEEE Signal Processing Society doesn’t hesitate to define its domain as “the science behind our digital life”.

The tremendous advances in computer science since the 1960s have been instrumental in the exponential development of signal processing. The ever-growing power of computers made it possible to implement, in real time, more and more sophisticated solutions.

Even if we are not fully aware of its underlying presence, signal processing is at the heart of our everyday life as well as of fundamental scientific advances. For example, a smartphone is packed with signal processing, performing many functions that allow us to communicate, exchange or store voice messages, music, pictures or videos. (Indeed, file standards such as MP3, JPEG, MPEG and many others are pure signal-processing products.)

The health field offers many examples of applications of signal processing, handling waveforms, images or video sequences under different modalities in problems as diverse as tracking of heartbeats, localisation of epileptic sources in the brain, echography, or magnetic-resonance imaging (MRI). The same holds for major areas such as energy or transportation, where the ever-growing deployment of sensors and the need to exploit the collected information put signal processing at the centre of global issues such as smart grids and smart cities.

Denoising data

A common denominator of many signal processing methods is their interest in “denoising” data, “disentangling” and “reconstructing” them while taking into account the limited resolution of sensing devices. This concern can also be found in other domains such as seismics, where the purpose is to image the subsurface using the echoes returned from emitted vibrations.
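As a hedged illustration of what “denoising” means in its simplest form, the sketch below (Python, standard library only) smooths a noisy sinusoid with a moving average and compares the error before and after. The signal, the noise level and the window width are assumptions chosen for the example; the methods used in practice are considerably more sophisticated.

```python
import math
import random


def moving_average(x, width=5):
    """Replace each sample by the mean of its neighbours.

    The window shrinks near the edges so the output keeps the input length.
    """
    half = width // 2
    out = []
    for i in range(len(x)):
        window = x[max(0, i - half): i + half + 1]
        out.append(sum(window) / len(window))
    return out


def mse(a, b):
    """Mean squared error between two sequences of equal length."""
    return sum((u - v) ** 2 for u, v in zip(a, b)) / len(a)


random.seed(0)  # fixed seed so the example is reproducible
N = 200
# Hypothetical clean signal: a slow sinusoid with a period of 100 samples.
clean = [math.sin(2 * math.pi * t / 100) for t in range(N)]
# Assumed measurement noise: Gaussian with standard deviation 0.5.
noisy = [c + random.gauss(0.0, 0.5) for c in clean]

smoothed = moving_average(noisy)

# The smoothed signal should be closer to the clean one than the raw data.
print(mse(noisy, clean), mse(smoothed, clean))
```

Averaging works here because the underlying signal varies slowly while the noise fluctuates from sample to sample. A wider window removes more noise but also blurs fast variations, which is one face of the resolution trade-off mentioned above.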

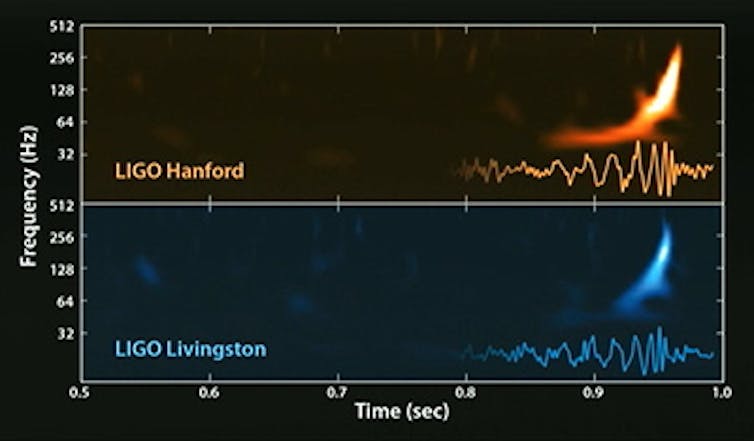

In astronomy and astrophysics, one can cite two recent examples in which signal processing proved instrumental. The first came out of the European Space Agency’s Planck mission, in which advanced source-separation techniques made it possible to reconstruct the “oldest picture” of the Universe. The second example is provided by the first direct detections on Earth of gravitational waves by the LIGO-Virgo collaborations. Beyond the instrumental feat itself, those extraordinary achievements were made possible by advanced signal-processing algorithms that were based in part on wavelet analysis, which can be seen as a powerful extension of Fourier’s work.

And tomorrow?

Signal processing is a key player in the digital revolution and in information science, yet today it faces new challenges. The digital world in which we live is an ever-growing source of data: hyperspectral imaging with hundreds of frequency channels, networks with thousands of sensors (for example, in environmental science), contact data on social networks…

These new configurations can lead signal processing to re-invent itself, in connection with techniques from distributed computing, optimisation or machine learning. Yet signal processing must keep its identity and specificities, and guarantee the development of methods that are both generalisable and computationally efficient. It must also be based on an in-depth understanding of data and be fundamentally aware of its potential impact on our society.