Big data has become some sort of celebrity. Everybody talks about it, but it is not clear what it is. To unpack its relevance to society it is important to backtrack a bit to understand why and how it came to be this ubiquitous problem.

Big data is about processing large amounts of data. It is associated with multiplicities of data formats stored somewhere, say in a cloud or in distributed computing systems.

But the ability to generate data systematically outpaces the ability to store it. The amount of data is becoming so big and is produced so fast that it cannot be stored with current technologies in a cost effective way. What happens when big data becomes too big and too fast?

How fundamental science contributes to society

The big data problem is yet another example of how the methods and techniques developed by scientists to study nature have had an impact on society. The techno-economic fabric that underlies modern society would be unthinkable without these contributions.

There are numerous examples of how findings intended to probe nature ended up revolutionising life. Big data is intimately intertwined with fundamental science and continues to evolve with it.

Consider just a few examples: what would life be without electricity or electromagnetic waves? Without the fundamental studies of Maxwell, Hertz and other physicists on the nature of electromagnetism we would not have radio, television or other forms of wave mediated communication, for that matter.

Modern electronics is based on materials called semi-conductors. What would life today be without electronics? The invention of transistors and eventually of integrated circuits is based entirely on the work scientists have done by thoroughly studying semi-conductors.

Modern medicine relies on countless techniques and applications. These range from x-rays, medical imaging physics and nuclear magnetic resonance to other techniques such as radiation therapeutic and nuclear medicine physics. Modern medicine and research would be unthinkable without techniques that were initially conceived for scientific research purposes.

How the information age came about

The big data problem initially emerged as a result of the need for scientists to communicate and exchange data.

At the European laboratory CERN in 1990, internet pioneer Tim Berners-Lee suggested a browser called WorldWideWeb, leading to the first web server. The internet was born.

The internet has magnified the ability to exchange information and learn, leading to a proliferation of data.

The problem isn’t only about volume. The time lapsing between the generation and processing of information has also been greatly reduced.

The Large Hadron Collider has pushed the boundaries of data collection to limits never seen before.

When the project, and its experiments, were being conceived in the late 1980s scientists realised that new concepts and techniques needed to be developed to deal with streams of data that were bigger than had ever been seen before.

It was then that concepts that contributed to cloud and distributed computing were developed.

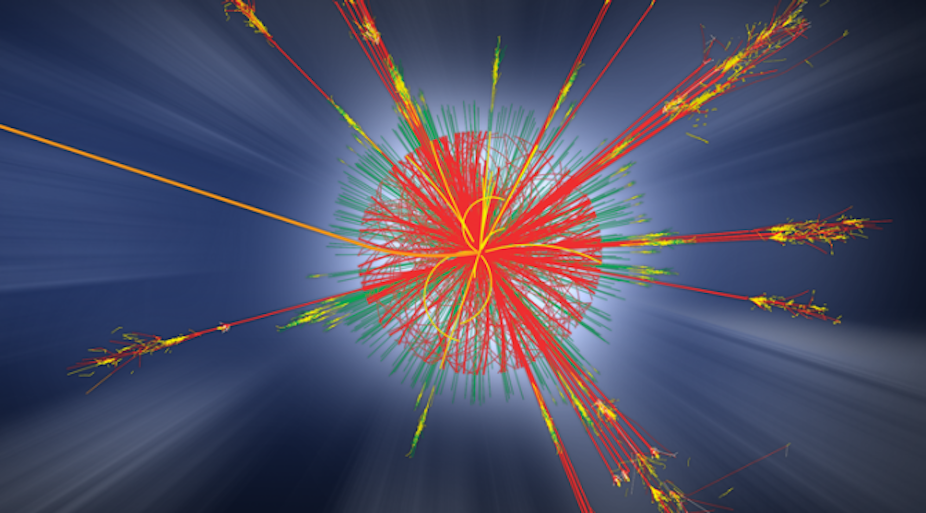

One of the main tasks of the Large Hadron Collider is to observe and explore the Higgs boson, a particle connected with the generation of mass of fundamental particles, by means of colliding protons at high energy.

The probability of finding a Higgs boson in a high-energy proton-proton collision is extremely small. For this reason it is necessary to collide many protons many times every second.

The Large Hadron Collider produces data flows of the order of petabytes every second. To give an idea of how big a petabyte is, the entire written works of mankind from beginning of written history, in all languages, can be stored in about 50 petabytes. An experiment at the Large Hadron Collider generates that much data in less than one minute.

Only a small fraction of the data produced is stored. But even this has already reached the exabyte scale (one thousand times a petabyte) leading to new challenges in distributed and cloud computing.

The Square Kilometre Array (SKA) in South Africa will start generating data in the 2020s. SKA will have the processing power of about 100 million PCs. The data it collects in a single day would take nearly two million years to play back on an iPod.

This will produce new challenges for the correlation of vast amounts of data.

Big data and Africa

The African continent often lags behind the rest of the world when it comes to embracing innovation. Nevertheless big data is increasingly being seen as a solution to tackling poverty on the continent.

The private sector has been the first to get out of the starting blocks. The bigger African firms are, naturally, more likely to have big data projects. In Nigeria and Kenya at least 40% of businesses are in the planning stages of a big data project compared with the global average of 51%. Only 24% of medium companies in the two countries are planning big data projects.

Rich rewards can be reaped from harnessing big data. For example, healthcare organisations can benefit from digitising, combining and effectively using big data. This could enable a range of players, from single-physician offices and multi-provider groups to large hospital networks, to deliver better and more effective services.

Grasping the challenge of managing big data could have big economic spin-offs too. With economies becoming more and more sophisticated and complex the amount of data generated increases rapidly. As a result, in order to improve these complex processes it is necessary to process and understand increasing volumes of data. With this labour productivity is enhanced.

But for any of these benefits to become reality, Africa needs specialists who are proficient in big data techniques. Universities on the continent need to start teaching how big data can be used to find solutions to scientific problems. A sophisticated economy requires specialists who are skilled in big data techniques.