Articles on Algorithmic bias

Tools used in the criminal justice system predict the risk of crime – but the scores are based on factors completely out of our control.

Bias in AI has been getting a lot of attention lately, but it’s just one aspect of the larger – and thornier – problem of fairness in AI.

Whether artificial intelligence leads to more or less energy use will depend on how we adapt to using it.

Using technology to screen job applicants might be faster than reading CVs and holding face-to-face interviews, but the most suitable candidate could be overlooked.

The explosion of generative AI tools like ChatGPT and fears about where the technology might be headed distract from the many ways AI affects people every day – for better and worse.

If safety is the heart of the Biden administration’s executive order on AI, then civil rights is its soul.

Effective implementation of existing law can protect us from the risks posed by AI algorithms.

Data used to train AI systems often reflects the racism inherent in society.

Reform for artificial intelligence is urgent. If we cut through the hype and self-interest, Australian law can promote responsible and safe AI.

The Federal Trade Commission’s investigation of ChatGPT maker OpenAI shows that the US government is beginning to get serious about regulating AI.

Large language models have been shown to ‘hallucinate’ entirely false information, but aren’t humans guilty of the same thing? So what’s the difference between the two?

Biased algorithms in health care can lead to inaccurate diagnoses and delayed treatment. Deciding which variables to include to achieve fair health outcomes depends on how you approach fairness.

Wall Street’s history of embracing high-speed algorithmic trading suggests ChatGPT will pose similar – if bigger – risks to financial markets.

One researcher’s experience from a quarter-century ago shows why bias in AI remains a problem – and why the solution isn’t a simple technical fix.

AI algorithms reinforce existing biases. Before they are introduced as routine tools in clinical care, we must establish ethical guidelines to reduce the risk of harm.

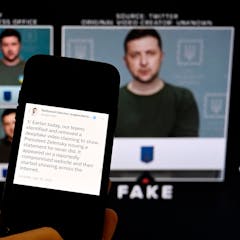

Powerful new AI systems could amplify fraud and misinformation, leading to widespread calls for government regulation. But regulating AI is easier said than done and could have unintended consequences.

What data privacy risk does TikTok pose, and what could the Chinese government do with data it collects? And is it even possible to ban an app?

The political content in our personal feeds not only represents the world and politics to us. It creates new, sometimes “alternative”, realities.

Searching the web with ChatGPT is like talking to an expert – if you’re OK getting a mix of fact and fiction. But even if it were error-free, searching this way comes with hidden costs.

The intersection of content management, misinformation, aggregated data about human behavior and crowdsourcing shows how fragile Twitter is and what would be lost with the platform’s demise.