With the release of artificial intelligence (AI) chatbot ChatGPT in November last year, the world of machine learning and AI has opened up to anyone who wants to use the bot to answer questions. And when OpenAI – the company behind ChatGPT – released its latest language model last March, it meant people could help guide its responses with specific material such as business documents on any topic.

While ChatGPT may not be able to produce the most creative responses, the ability to guide it with specific documents could help with time-consuming, but important, processes like writing policies for quality management, data protection, or equality, diversity and inclusion.

Since this generative AI technology is so new, there isn’t a lot of research about how it can be used by companies yet. So we conducted an experiment to see what kind of help an AI assistant like ChatGPT could provide when it comes to writing policies. We found that, although it didn’t give the most creative responses, ChatGPT could be a useful tool for helping small businesses to create standard HR policy documents.

Missing or poorly written business policies can cause problems for companies and confusion for employees. Policies must be precise and clear so that employees and other readers can understand them without ambiguity.

They should also align with an organisation’s typical communication style. Policies may also need to use specific vocabulary if external standards are being followed in areas like quality management for products and services offered.

We used seven equality, diversity and inclusion (EDI) policies from a range of organisations, including a public health authority, a university, a large construction firm, a cultural organisation and a charity, to create an index of these documents.

The different policies reflect a range of sectors and sizes but we kept the sample small to align with the likely time and resource constraints of a smaller organisation without a dedicated HR team.

We also included a “nonsense” control paper, completely unrelated to EDI, in our experiment to see how much the EDI materials we shared influenced the ChatGPT responses. This also helped determine when the AI was “hallucinating” – or making up plausible results based on little or no evidence.
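To illustrate what an index of this kind does, here is a minimal sketch in Python. It is not the tooling used in the experiment; the document texts, the names (`DOCUMENTS`, `build_index`, `retrieve`) and the simple word-overlap scoring are all assumptions made for illustration. The idea is that each document is reduced to word counts, a question is scored against every document, and an unrelated control document should score at or near zero.

```python
import math
import re
from collections import Counter

# Made-up stand-ins for the indexed documents (the real policies are not reproduced here).
DOCUMENTS = {
    "edi_policy_a": "We are committed to equality, diversity and inclusion for all employees.",
    "edi_policy_b": "The board and senior management support diversity and equal opportunities.",
    "control_paper": "Sourdough bread needs flour, water, salt and a long fermentation.",
}

# A tiny, hand-picked stop-word list so that filler words do not count as matches.
STOP_WORDS = {"a", "all", "an", "and", "are", "for", "most", "on", "that", "the", "to", "we", "what"}


def tokenize(text):
    """Lower-case the text and keep alphabetic words that are not stop words."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]


def build_index(documents):
    """Map each document name to a bag of word counts."""
    return {name: Counter(tokenize(text)) for name, text in documents.items()}


def cosine(a, b):
    """Cosine similarity between two word-count bags (0.0 when there is no overlap)."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def score(index, query):
    """Score every indexed document against the query."""
    query_bag = Counter(tokenize(query))
    return {name: cosine(bag, query_bag) for name, bag in index.items()}


def retrieve(index, query, top_k=2):
    """Return the names of the top_k best-matching documents."""
    scores = score(index, query)
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


INDEX = build_index(DOCUMENTS)
QUERY = "What are the most important considerations for an equality, diversity and inclusion policy?"
SCORES = score(INDEX, QUERY)
TOP = retrieve(INDEX, QUERY)
```

In this toy version the control document shares no meaningful words with the question, so it is never retrieved. If an answer nonetheless appeared to draw on it, that would be a sign that the response was not grounded in the indexed material.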

The first test

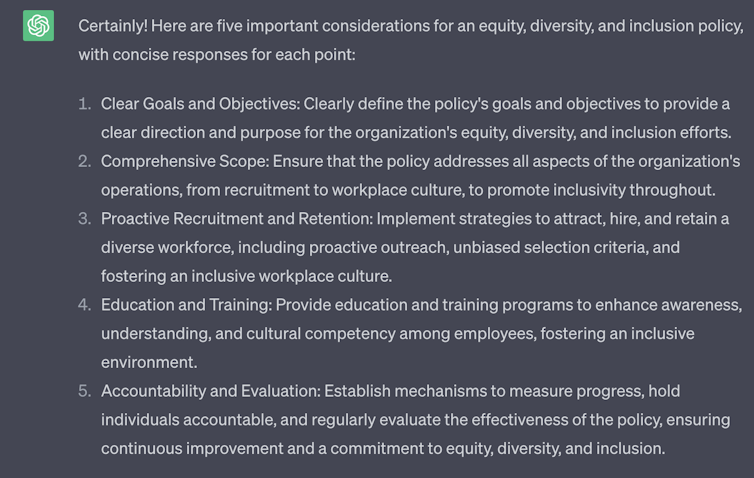

First, we prompted the general ChatGPT (so, we didn’t ask it to draw on our index of EDI papers) with the following question: “What are the five most important considerations for an equity, diversity and inclusion policy?”

The image below shows its response:

These results appear reasonable and were drawn from the general knowledge of the ChatGPT model – a large language model that’s trained on the contents of the public internet at a particular moment in time. This means that, among the vast collection of documents that ChatGPT has been trained upon, enough EDI policies are included to generalise five points in a style that reflects wording commonly found in such documents.

Asking the same query coupled with the index from the seven existing EDI policies produced a far more concise list of responses. Summarising individual documents is a relatively straightforward task, but an AI-based tool can synthesise the contents of seven different policies accurately only up to a point.

For example, one of its points was: “supporting the policy with the Board of Trustees and senior management”. But not all of the organisations whose policies we included in the sample have a board of trustees.
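The difference between the two ways of asking can be sketched in a few lines of Python. This is a hypothetical illustration rather than the setup used in the experiment: the function name `build_prompt`, the instruction wording and the sample excerpt are assumptions. Without excerpts the model receives the bare question and relies on its general training; with excerpts, the same question is preceded by text taken from the indexed policies.

```python
QUESTION = (
    "What are the five most important considerations for an equity, "
    "diversity and inclusion policy?"
)


def build_prompt(question, excerpts=None):
    """Return the bare question, or the question preceded by indexed excerpts."""
    if not excerpts:
        # General ChatGPT: no supporting material is supplied.
        return question
    # Indexed variant: list the excerpts first (instruction wording is an assumption).
    context = "\n".join(f"- {excerpt}" for excerpt in excerpts)
    return (
        "Answer using the policy excerpts below.\n"
        f"Excerpts:\n{context}\n\n"
        f"Question: {question}"
    )


general_prompt = build_prompt(QUESTION)
indexed_prompt = build_prompt(
    QUESTION,
    ["The Board of Trustees and senior management support this policy."],  # made-up excerpt
)
```

A sketch like this also shows how a detail from one source, such as a board of trustees, can carry over into a synthesised answer even when it does not apply to every organisation in the sample.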

A tougher test

Next, we set a harder task: “use the summary bullets to write the introduction to an equity, diversity and inclusion policy for a small consulting company called Thrip and Wear Associates*.”

ChatGPT produced very similar results both when it used our index of policies and when it didn’t. Its first paragraph showed potential, using inclusive pronouns and including a series of statements that set out good practice. But by the second and third sentences a preference for joining separate points with “and” indicated a fairly mechanical reworking of the previous bullet points.

“Thrip and Wear Associates is committed to creating an inclusive and diverse workplace that encourages and celebrates the unique contributions of all our employees. We are dedicated to providing equal opportunities for all employees and eliminating any form of unlawful discrimination. We strive to create an environment where everyone feels respected and valued, and where each employee can reach their full potential.”

This simplistic reworking was further confirmed by the inclusion of lengthy comma lists relating to protected characteristics (age, pregnancy and so on) and working conditions. The risk here is that these items won’t be read as examples but as a comprehensive list of what is included in a company’s policy, implying other characteristics or attributes are excluded.

After this, the AI gave up and stopped generating responses. It had run out of memory trying to list people who might engage in behaviours that run counter to an EDI policy: fellow employees, clients, stakeholders, suppliers, visitors – a list that could arguably include anyone.

Solving challenges with ChatGPT

We then presented details of a made-up clothing manufacturer and challenged ChatGPT to list the key actions for a new EDI policy for the company. We gave details such as the number and gender ratio of employees and managers, but no data on employee backgrounds or EDI policies.

ChatGPT’s response repeated the points it had listed for the key themes it had previously found within the existing seven EDI policies. It’s difficult to fault this.

It’s a plausible way to tackle the problems posed by the scenario, and why should the response be new or offer more than the previous answer? Our prompt certainly didn’t ask for any creativity.

Company policies aim to create a safe middle ground. They sift out extremes, encourage consistent, desirable behaviour and sideline isolated views or contrary perspectives. Just the kind of thing ChatGPT and similar technologies excel at reproducing.

But the similarities between the responses from our index/prompt combination of EDI policies and those from the normal ChatGPT interface show there is no need for additional input, training or coding to use ChatGPT to create something like an EDI policy, a type of document that is so regularly found online.

In fact, our experiment suggested that our efforts at indexing specific EDI policies actually constrained the technology. As such, the general ChatGPT interface works best for business policy writing.

The last test

Finally, to check that ChatGPT was using the indexed EDI documents, we challenged it to produce a haiku based on the policies. Its response:

Equal rights for all

Diversity and inclusion

A better future awaits.

This answer certainly ticks a lot of EDI boxes when it comes to vocabulary, but it doesn’t really mean much. This is something organisations should remember as they experiment with AI to make working life easier: right now this technology works best as a support to the real people operating it, rather than a replacement.

*Fictitious company name created for the purposes of this experiment.