The profound impact caused by the storm that began as Hurricane Sandy has turned the spotlight on many issues, including forecasts. In short: how well was Sandy forecast, and where do our forecasts need improvement?

Sandy began its eventful life as an area of low pressure in the Caribbean, with some disorganised thunderstorms popping up here and there. On Friday, October 19, forecasters at the US National Hurricane Center flagged this area as one to watch for potential development into a tropical cyclone, giving a 20% chance of “genesis” into a tropical depression within the next two days.

The forecasters’ confidence in this genesis increased steadily through to Monday, October 22, when the system was classified as a tropical depression.

This example already gives us a clue as to how far forecasting has evolved. Through improved satellite images and computer models together with forecasters’ insights, a two-day forecast of the chance of a seemingly random cluster of thunderstorms organising into a tropical cyclone is now a routinely used product with considerable skill.

Over the next few days, the depression developed into a tropical storm and was christened “Sandy”, whereupon it parked itself in the western Caribbean before abruptly turning northward towards Jamaica and eastern Cuba (unfortunately causing considerable loss of life).

This change in motion was captured well by the computer models and the forecasters. By Wednesday, October 24, one of the best models (from Europe) was repeatedly suggesting Sandy would move towards the north (as is normal) followed by a surprising westward turn towards the north-eastern United States.

Other reputable models disagreed and carried Sandy on a more conventional and harmless course towards the east, over the Atlantic Ocean.

But by Thursday, October 25, many of the models converged, giving forecasters confidence (and fear) that the potential worst-case scenario could occur.

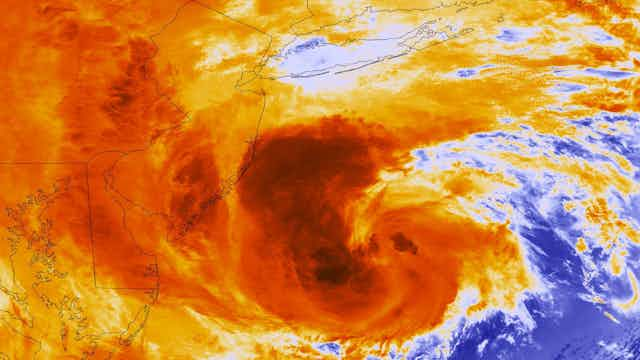

Sandy would merge with a vigorous cold low-pressure system moving eastward across the northern United States, and then take the unusual westward path towards one of the most populous regions of the country while maintaining its hurricane-force strength.

The merger and westward turn would also be influenced by a high-pressure system unusually parked over eastern Canada, another weather feature that was well predicted by the models.

These three features (Sandy, the low, and the high) would conspire to produce an impeccably-timed merger of weather systems into a gigantic storm. The rest is history.

It’s getting better

Would such an accurate forecast, four days in advance of the impact, have been made 20 years ago? Probably not.

There have been many advances. Forecasters rely on guidance from several models, run mostly by government agencies around the world.

These models, which are run on supercomputers, solve a complicated set of equations involving wind, pressure, temperature and moisture.

Since the power of these supercomputers has increased by orders of magnitude over the past two decades, atmospheric flows around the globe can now be represented at a higher resolution, which makes more accurate forecasts achievable.

Finer scale meteorological processes are captured and the representation of cloudy areas, while never perfect, has improved considerably.

But this is not the entire story. In order to know what the future will bring, we also need to know what the atmospheric conditions are right now.

This is accomplished by feeding about 20 million pieces of observational data a day into the computer model through a mathematical technique called “data assimilation”.

This technique has improved by leaps and bounds, as have the volume and quality of the observational data, particularly from weather satellites. As a result, we now have better “initial conditions” for our computer models.
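
To give a feel for the idea, here is a minimal sketch of the simplest textbook form of data assimilation: blending one model value (the "background") with one observation, each weighted by how uncertain it is. Operational systems do something far more elaborate across millions of variables at once, so this is an illustration and not how any forecasting centre actually implements it. The function name `assimilate` and all the pressure values and error variances below are invented for the example.

```python
def assimilate(background, observation, background_var, observation_var):
    """Blend a model background value with an observation.

    Each input is weighted by its error variance: the less certain the
    background is relative to the observation, the more the result moves
    towards the observation. Returns (analysis, analysis_variance).
    """
    # Weight given to the observation, between 0 and 1.
    gain = background_var / (background_var + observation_var)
    # Nudge the background towards the observation by that weight.
    analysis = background + gain * (observation - background)
    # The blended estimate is more certain than the background alone.
    analysis_var = (1.0 - gain) * background_var
    return analysis, analysis_var


# Hypothetical numbers: the model thinks the central pressure is 990 hPa
# (error variance 16), while an observation reports 982 hPa (error variance 4).
analysis, analysis_var = assimilate(990.0, 982.0, 16.0, 4.0)
print(f"analysis: {analysis:.1f} hPa, variance: {analysis_var:.1f}")
```

Because the observation in this made-up case is trusted more than the model, the blended value ends up much closer to the observation, and its uncertainty is smaller than that of either input.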

Ensemble forecasting

Another technique that has evolved over the past 20 years is “ensemble forecasting”, where tens of computer model forecasts are run at the same time with different tweaks to the initial conditions and model.

A forecaster can then infer confidence in a forecast depending on whether the storm tracks are tightly clustered (high confidence) or spread out (low confidence).

During the week before Sandy’s historic impact, more and more members of the ensemble began trending away from the ocean and towards the northeastern United States, increasing forecaster confidence.
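
The way a forecaster reads an ensemble can be sketched in a few lines of code. The example below is only an illustration under simple assumptions: each ensemble member is reduced to a single number, its distance east of the coastline (in km) at a fixed forecast time, with negative values meaning the storm has come ashore. The member positions and the helper names `track_spread` and `fraction_onshore` are made up for this sketch and are not taken from any real Sandy forecast.

```python
from statistics import mean, pstdev


def track_spread(positions_km):
    """Return (mean position, spread) of the ensemble members, in km.

    A small spread means the members are tightly clustered
    (high confidence); a large spread means low confidence.
    """
    return mean(positions_km), pstdev(positions_km)


def fraction_onshore(positions_km, coast_km=0.0):
    """Fraction of members whose position is at or west of the coast."""
    onshore = [p for p in positions_km if p <= coast_km]
    return len(onshore) / len(positions_km)


# Hypothetical distance east of the coast (km) for eight ensemble members.
early_run = [-300, 400, 900, 1200, -100, 700, 1000, 200]   # members disagree
later_run = [-150, -200, -100, -250, -180, -120, 50, -160]  # members converge

for label, run in (("early", early_run), ("later", later_run)):
    centre, spread = track_spread(run)
    share = fraction_onshore(run)
    print(f"{label}: mean {centre:.0f} km, spread {spread:.0f} km, "
          f"{share:.0%} of members onshore")
```

In this invented case the later run has both a much smaller spread and a far larger share of members coming ashore, which is the kind of shift that raises a forecaster's confidence in a landfall.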

Although they are not perfect, forecasts of a tropical cyclone’s position (or track) have improved overall, and there is good reason to celebrate that.

Unfortunately, this is not the case for forecasts of the cyclone’s wind structure and strength, rainfall amount and distribution, and storm surge.

Although the forecasts of these complicated quantities turned out to be remarkably good for Sandy, there is plenty of room for improvement.

Intensive research is being done worldwide in order to better understand the complex behaviour within the cyclone itself, and we hope that considerable inroads can be made over the next decade.

The next frontier of forecasting involves impacts:

- What structural damage will the storm surge and wind cause?

- Where will rivers break their banks?

- Where and when will electric power be lost?

- How can human and economic losses best be mitigated?

These perplexing questions will keep hurricane researchers and forecasters gainfully occupied until the next Sandy comes along.