When people talk about ‘big data’, there is an oft-quoted example: a proposed public health tool called Google Flu Trends. It has become something of a pin-up for the big data movement, but it might not be as effective as many claim.

The idea behind big data is that large amounts of information can help us do things which smaller volumes cannot. Google first outlined the Flu Trends approach in a 2008 paper in the journal Nature. Rather than relying on the disease surveillance used by the US Centers for Disease Control and Prevention (CDC) – such as visits to doctors and lab tests – the authors suggested it would be possible to predict epidemics through Google searches. When suffering from flu, many Americans will search for information related to their condition.

The Google team collected more than 50 million potential search terms – all sorts of phrases, not just the word “flu” – and compared the frequency with which people searched for these terms with the number of reported influenza-like illness cases between 2003 and 2006. This comparison revealed that, out of the millions of phrases, there were 45 that provided the best fit to the observed data. The team then tested their model against disease reports from the subsequent 2007 epidemic. The predictions appeared to be pretty close to real-life disease levels. Because Flu Trends would be able to predict an increase in cases before the CDC could, it was trumpeted as the arrival of the big data age.
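The select-then-test procedure described above can be sketched in a few lines of Python. This is a simplified illustration, not Google's actual method: the three search terms, the weekly numbers and the plain straight-line fit are all invented assumptions, and the real study worked with millions of candidate phrases.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a flat series carries no signal
    return cov / math.sqrt(var_x * var_y)

def top_terms(term_series, cases, k):
    """Return the k candidate terms whose search frequency best tracks cases."""
    ranked = sorted(term_series,
                    key=lambda term: pearson(term_series[term], cases),
                    reverse=True)
    return ranked[:k]

def fit_line(xs, ys):
    """Least-squares fit of ys = intercept + slope * xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return mean_y - slope * mean_x, slope

def mean_abs_error(predicted, observed):
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(observed)

# Synthetic weekly data, invented for illustration: three "years" of 52 weeks.
WEEKS = range(156)
cases = [100 + 80 * math.cos(2 * math.pi * t / 52) + 10 * math.sin(1.7 * t)
         for t in WEEKS]
terms = {  # hypothetical search terms and frequencies
    "flu symptoms": [0.5 * c + 5 * math.sin(2.3 * t) for t, c in zip(WEEKS, cases)],
    "ski holidays": [30 + 20 * math.cos(2 * math.pi * t / 52) + 8 * math.sin(0.9 * t)
                     for t in WEEKS],
    "cat videos": [20 + 10 * math.sin(3.1 * t) for t in WEEKS],
}

TRAIN = slice(0, 104)   # choose terms and fit on the first two years
TEST = slice(104, 156)  # then check against the following year

def combined_signal(names, window):
    """Add up the weekly frequencies of the chosen terms."""
    columns = [terms[name][window] for name in names]
    return [sum(week) for week in zip(*columns)]

train_terms = {name: series[TRAIN] for name, series in terms.items()}
selected = top_terms(train_terms, cases[TRAIN], k=2)

intercept, slope = fit_line(combined_signal(selected, TRAIN), cases[TRAIN])
predicted = [intercept + slope * x for x in combined_signal(selected, TEST)]
holdout_error = mean_abs_error(predicted, cases[TEST])

print(selected, round(holdout_error, 1))
```

In this toy data the hypothetical "ski holidays" term is selected alongside "flu symptoms" simply because both peak in winter. A term can fit past seasons well without having anything to do with illness, and the held-out year only tests the model fairly if it resembles the years used for fitting.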

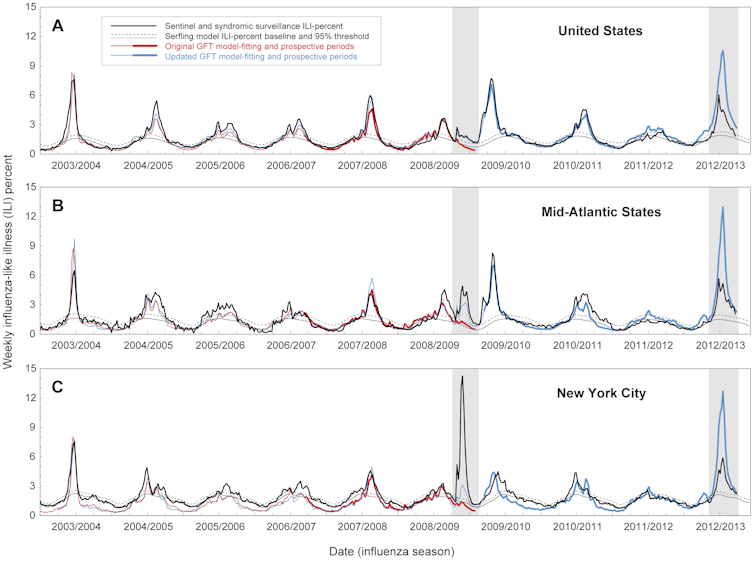

Between 2003 and 2008, flu epidemics in the US had been strongly seasonal, appearing each winter. However, in 2009, the first cases (as reported by the CDC) appeared around Easter. Flu Trends had already made its predictions when the CDC data was published, but it turned out that the Google model didn’t match reality. It had substantially underestimated the size of the initial outbreak.

The problem was that Flu Trends could only measure what people search for; it didn’t analyse why they were searching for those words. By removing human input, and letting the raw data do the work, the model had to make its predictions using only search queries from the previous handful of years. Although those 45 terms matched the regular seasonal outbreaks from 2003–8, they didn’t reflect the pandemic that appeared in 2009.

Six months after the pandemic started, Google – who now had the benefit of hindsight – updated their model so that it matched the 2009 CDC data. Despite these changes, the updated version of Flu Trends ran into difficulties again last winter, when it overestimated the size of the influenza epidemic in New York State. The incidents in 2009 and 2012 raised the question of how good Flu Trends is at predicting future epidemics, as opposed to merely finding patterns in past data.

In a new analysis, published in the journal PLOS Computational Biology, US researchers report that there are “substantial errors in Google Flu Trends estimates of influenza timing and intensity”. This is based on a comparison of Google Flu Trends predictions with the actual epidemic data at the national, regional and local levels between 2003 and 2013.

Even when search behaviour was correlated with influenza cases, the model sometimes misestimated important public health metrics such as peak outbreak size and cumulative cases. The predictions were particularly wide of the mark in 2009 and 2012.
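A small sketch shows how a prediction can correlate closely with reported cases and still get the peak size and cumulative total badly wrong. The weekly figures below are invented for illustration, and the function names are hypothetical rather than taken from the published analysis.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)

def relative_error(estimate, truth):
    """Signed error as a fraction of the true value (0.5 means 50% too high)."""
    return (estimate - truth) / truth

def compare_season(predicted, observed):
    """Summarise how a predicted season differs from the reported one."""
    return {
        "correlation": pearson(predicted, observed),
        "peak_size_error": relative_error(max(predicted), max(observed)),
        "peak_timing_error_weeks": (predicted.index(max(predicted))
                                    - observed.index(max(observed))),
        "cumulative_error": relative_error(sum(predicted), sum(observed)),
    }

# Invented weekly case counts for one season.
observed = [10, 20, 40, 80, 60, 30, 15, 5]
# A made-up prediction with the right shape that runs 50% too high every week.
predicted = [1.5 * x for x in observed]

summary = compare_season(predicted, observed)
for name, value in summary.items():
    print(name, round(value, 3))
```

Here the two curves rise and fall together, so the correlation is essentially perfect, yet both the peak and the season's total are overestimated by half. This is why correlation alone is a weak test of a forecasting tool.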

Although they criticised certain aspects of the Flu Trends model, the researchers think that monitoring internet search queries might yet prove valuable, especially if it were linked with other surveillance and prediction methods.

Other researchers have suggested that further sources of digital data – from Twitter feeds to mobile phone GPS – have the potential to be useful tools for studying epidemics. As well as helping to analyse outbreaks, such methods could allow researchers to study human movement and the spread of public health information (or misinformation).

Although much attention has been given to web-based tools, there is another type of big data that is already having a huge impact on disease research. Genome sequencing is enabling researchers to piece together how diseases transmit and where they might come from. Sequence data can even reveal the existence of a new disease variant: earlier this week, researchers announced a new type of dengue fever virus.

There is little doubt that big data will have some important applications over the coming years, whether in medicine or in other fields. But advocates need to be careful about what they use to illustrate the ideas. While there are plenty of successful examples emerging, it is not yet clear that Google Flu Trends is one of them.