The Massachusetts Institute of Technology’s (MIT) Distinctive Collections archive is quiet while the blizzard blows outside. Silence seems to be accumulating with the falling snow. I am the only researcher in the archive, but there is a voice that I am straining to hear.

I am searching for someone – let’s call her the missing secretary. She played a crucial role in the history of computing, but she has never been named. I’m at MIT as part of my research into the history of talking machines. You might know them as “chatbots” – computer programmes and interfaces that use dialogue as the major means of interaction between human and machine. Perhaps you have talked with Alexa, Siri or ChatGPT.

Despite the furore around generative artificial intelligence (AI) today, talking machines have a long history. In 1950, computer pioneer Alan Turing proposed a test of machine intelligence. The test asks whether a human could differentiate between a computer and a person via conversation. Turing’s test spurred research in AI and the nascent field of computing. We now live in that future he imagined: we talk to machines.

I am interested in why early computer pioneers dreamt of talking to computers, and what was at stake in that idea. What does it mean for the way we understand computer technology and human-machine interaction today? I find myself at MIT, in the middle of this blizzard, because it was the birthplace of the mother of all bots – Eliza.

Eliza’s speech

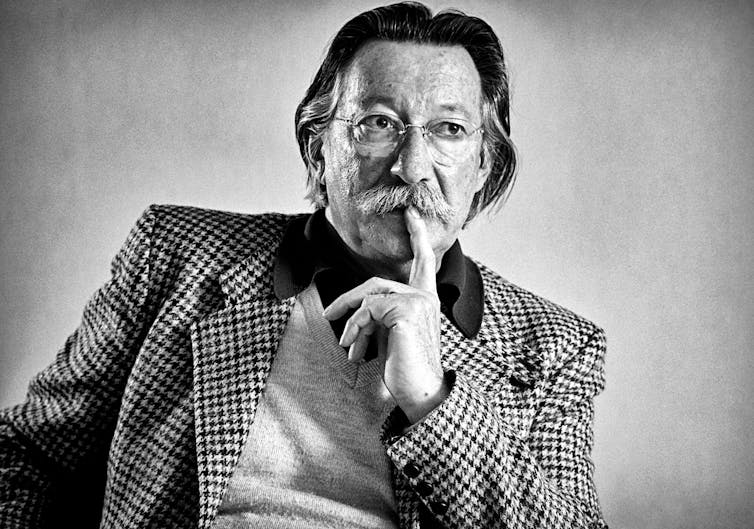

Eliza was a computer program developed by the mustachioed MIT professor of electrical engineering, Joseph Weizenbaum, in the 1960s. Through Eliza, he aimed to make conversation between human and computer possible.

Eliza took typed messages from the user, parsed them for keyword triggers and used transformation rules (rules that break the user's statement into parts and reassemble those parts into a reply) to produce a response. In its most famous version, Eliza purported to be a psychotherapist, an expert responding to the user’s needs. “Please tell me your problem” was the opening prompt. Eliza could not only receive input in natural language; it also gave the “illusion of understanding”.
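To make the keyword-and-transformation idea concrete, here is a toy sketch in Python. It is not Weizenbaum's program. The rules, the `REFLECTIONS` table and the `respond` function are hypothetical, invented for illustration. Each rule pairs a keyword pattern, which decomposes the input, with a template that reassembles the captured fragment into a reply. Pronouns are swapped so the fragment reads correctly when it is handed back to the user.

```python
import re

# Hypothetical rules in the spirit of Eliza: a keyword pattern decomposes the
# input, and a reassembly template builds the reply from the captured parts.
RULES = [
    (re.compile(r".*\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r".*\bmy (mother|father)\b(.*)", re.IGNORECASE), "Tell me more about your family."),
    (re.compile(r".*\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
]

# Swap first- and second-person words so captured fragments read correctly.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

# Fallback when no keyword is found.
DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Replace each word with its reflected form, if it has one."""
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(message: str) -> str:
    """Try each rule in order and reassemble the first match into a reply."""
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.match(text)
        if match:
            parts = [reflect(group) for group in match.groups()]
            return template.format(*parts)
    return DEFAULT_REPLY
```

For example, `respond("I feel sad about my work")` returns "Why do you feel sad about your work?". The program merely rearranges the user's own words, which is why such a simple mechanism can produce what feels like attentive listening.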

The program’s name was a nod to the protagonist of George Bernard Shaw’s play Pygmalion (1912) in which a Cockney flower seller is taught to speak “like a lady”. Like the Audrey Hepburn musical of 1964, this Eliza took the world by storm. Newspapers and magazines hailed the fruition of Turing’s dream.

Even Playboy played with it. Eliza’s legacy is significant. Siri and Alexa are the direct descendants of this program.

Accounts of Eliza tend to focus on a Frankensteinian tale of the inventor’s rejection of his own creation. Weizenbaum was horrified that users could be “tricked” by a piece of simple software. In the decades that followed, he renounced Eliza and the whole “Artificial Intelligentsia”, to the chagrin of his colleagues.

But I am not in the archive to hear Eliza’s voice, or Weizenbaum’s. In all these accounts of Eliza, one woman crops up again and again – our missing secretary.

The missing secretary

In his accounts of Eliza, Weizenbaum repeatedly worries about a particular user:

My secretary watched me work on this program over a long period of time. One day she asked to be permitted to talk with the system. Of course, she knew she was talking to a machine. Yet, after I watched her type in a few sentences she turned to me and said: ‘Would you mind leaving the room, please?’

Weizenbaum saw her response as worrying evidence that: “Extremely short exposures to a relatively simple computer program could induce powerful delusional thinking in quite normal people.” Her reaction sowed the seeds of his later abhorrence of his creation.

But who was this “quite normal” person? And what did she think of Eliza? If the missing secretary played such an important role, then why don’t we hear from her? In this chapter of the history of talking machines, we only have one side of the conversation.

Back in the archive, I want to see if I can recover the secretary’s voice, to understand what we might learn from Eliza’s user. I work my way through Weizenbaum’s yellowed papers. Surely, among the transcripts, code printouts, letters and notebooks, there will be evidence? There are some clues: references to a secretary in letters to and from Weizenbaum. But no name.

I broaden my hunt to administrative records. I look in department papers and the collections of Weizenbaum’s workplace, Project MAC – the hallowed centre of computing innovation at MIT. No luck. I contact the HR office and MIT’s alumni group. I stretch the patience of the ever-generous archivists. As my last day arrives, I still hear only silence.

Listening to silences

But the hunt has revealed some things. How little organisations have historically cared about the people who produced, organised and saved so much of their knowledge, for one.

In the history of institutions such as MIT and computing more generally, the writers of those records – often poorly paid, low status women – are largely written out. Our silent secretary is the quintessential effaced, anonymous transcriber of the documents on which history is built.

The contributions of the users of talking machines – their labour, expertise, perspectives, creativity – are all too often ignored. When the model is “talk”, it’s easy to think those contributions are effortless or unimportant. But belittling these contributions has real consequences, not only for the talking machine technology we design, but also for the ways we value the human input in those systems.

With generative AI we speak of user input in terms of “chat” and “prompts”. But what kind of legal status can “talk” claim? Should we, for example, be able to claim copyright over those remarks? What about the work on which those systems are trained? How do we recognise those contributions?

The blizzard is worsening. The announcement rings out that the campus is closing early due to the weather. The missing secretary’s voice still eludes me. For now, the history of talking machines remains one sided. It’s a silence that haunts me as I trudge home through the muffled, snowbound streets.
