In a report leaked to the Australian media, Facebook has outlined how it can use an analysis of activity and sentiment of users posting on its platforms to identify their various levels of anxiety.

The report specifically talks about having analysed data on 6.4 million young people in high school, universities and the workforce. The analysis claims to be able to differentiate when people feel “stressed”, “defeated”, “overwhelmed”, “anxious”, “nervous”, “stupid”, “silly”, “useless”, and a “failure”.

Australian researchers, working with Facebook data, have also identified that, generally, young people express “anticipatory emotions” in the early part of the week and “reflective emotions” on the weekend. They have also identified when these users are “working out and losing weight”, or feeling that they are “looking good” with “body confidence”.

Facebook has denied that it offers “tools to target people based on their emotional state”. According to the company, the research was done simply to understand how people express themselves on Facebook.

Facebook has launched a review of how this research was conducted, because it did not follow the company’s established process for reviewing the research it performs.

This is far from the first time that Facebook has created controversy over the research it performs, without informed consent, on its users. In 2014, Facebook deliberately attempted to manipulate the emotional state of 689,000 of its users.

The report itself was apparently being used by sales representatives from Facebook as part of their pitch to one of Australia’s top four banks.

Because the report was part of a sales pitch, and not a peer-reviewed piece of published research, its claims have to be treated with a degree of skepticism.

Firstly, sentiment analysis is notoriously hard to get right when applied at the level of an individual and her posts. There is the problem of the general ambiguity of language. Someone could say “great” and mean it in either a positive or negative (sarcastic) way, for example. A sentiment analysis program would interpret it only as being positive because it doesn’t have the context and intention.
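The sarcasm problem can be illustrated with a minimal sketch of a word-list sentiment scorer. This is not Facebook's method, which the report does not describe; the word lists, the function name `naive_sentiment` and the example posts are all hypothetical, chosen only to show why a program without context scores a sincere and a sarcastic “great” identically.

```python
# Hypothetical word lists for illustration only; a real lexicon would be far larger.
POSITIVE = {"great", "good", "love", "happy"}
NEGATIVE = {"useless", "stupid", "failure", "anxious"}


def naive_sentiment(text: str) -> int:
    """Score a post as (number of positive words) minus (number of negative words)."""
    # Lowercase each word and strip surrounding punctuation before lookup.
    words = [w.strip(".,!?\"'").lower() for w in text.split()]
    return sum((w in POSITIVE) - (w in NEGATIVE) for w in words)


# Two hypothetical posts: one sincere, one sarcastic.
sincere = "I passed my exam. Great!"
sarcastic = "My phone died right before the interview. Great."

# Both posts receive the same positive score, because the scorer
# sees only the word "great" and nothing about the situation or intent.
print(naive_sentiment(sincere))
print(naive_sentiment(sarcastic))
```

More sophisticated models use surrounding words and learned patterns, but the underlying difficulty remains: the intent behind a single short post is often not recoverable from its text alone.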

Secondly, Facebook has repeatedly demonstrated that, despite its algorithms, it is unable to identify when its users post content that is harmful or offensive, hate speech or bullying, or even content indicating that the poster is carrying out physical violence or murder.

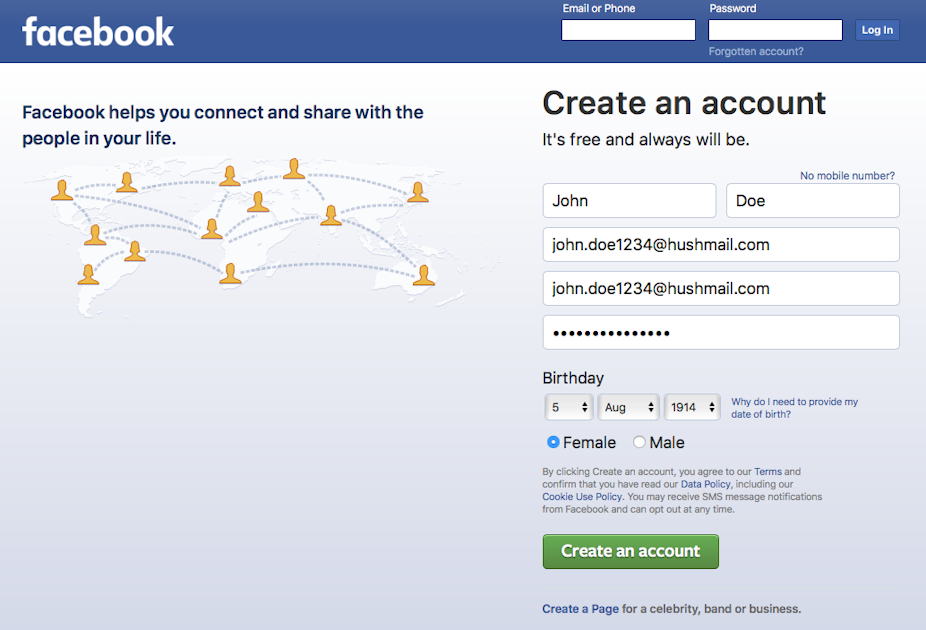

Thirdly, Facebook does not acknowledge the very real issue that the profile information of a large number of its users is false. There have been reports, for example, that nearly 40% of kids on Facebook are under the age of 13, the minimum age Facebook allows on its platform.

The fact that this research was done at all, however, still highlights the imbalance that exists in the relationship between Facebook and its users. For Facebook users, the content is part of their own expression and their communications and connections with others. To Facebook, it is simply content to be mined and exploited, and the user is someone to be targeted with advertising. All Facebook users, but young people especially, need to understand this imbalance and appreciate that it isn’t OK for companies like Facebook to know their most intimate or private thoughts, or share every aspect of their lives.

To redress this imbalance, kids and young people should be taught about what is and isn’t appropriate to share on Facebook. This is actually quite hard to get people to do, because it requires conscious self-control and potentially stops people from genuinely interacting with their friends.

Another approach would be to “spoof” Facebook’s algorithms. This could be done by changing profile details, such as listing an older age or a different gender, and creating a fictitious education and employment history. Avoiding the “like button” will also stop Facebook getting any real sense of what a person is potentially interested in.

Finally, it is very important to not be logged into Facebook in a browser that is being used to visit other sites. In Australia and the US, Facebook will track activity even when not explicitly on Facebook itself. Use a separate browser or just the mobile app to access Facebook.

Of course, the simplest way to avoid being part of Facebook’s ongoing social experimentation with its users is to avoid using it altogether.