One aspect of the federal budget that hasn’t attracted the attention it should is the government’s plan to introduce “performance” funding for universities.

Performance funding isn’t new to Australia’s universities: funding for research has long been tied to performance measures. Between 2005 and 2009, there was a system of performance funding for learning and teaching originally introduced by Brendan Nelson. University performance is also encouraged via competition for students and research grants.

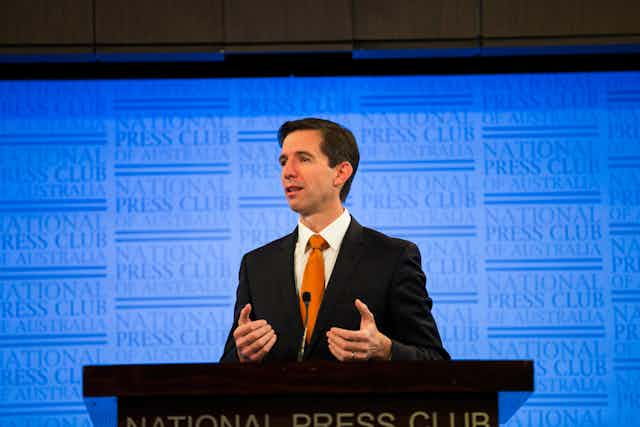

So what does this latest approach to “performance funding” add? And in a time of general funding uncertainty, what is Education Minister Simon Birmingham hoping to achieve?

The Birmingham plan

The government’s performance funding plan involves making 7.5% of Commonwealth Grant Scheme (CGS) funding for higher education a pool of funds for which all universities compete. This means that the $500 million universities currently count on to fund salaries – the largest single annual expenditure of any university – and all other annual expenditures will no longer be predictable.

The first consequence that must be addressed is budgetary. Each university will need to cut its budget beyond the already announced 2.5% cut in 2018 and again in 2019.

A university budgets principally on the basis of the number of students enrolled and the average amount of money each student will bring. For domestic undergraduate students, the largest group of students in Australian universities, those dollars come through the CGS. If 7.5% of each student’s funding will not follow the student, but will flow depending on the minister’s assessment of whether a university has met benchmarks determined on a changing set of education indicators, then this money cannot prudently be included in the budget.

In 2018, then, each university must factor in the possibility of a 7.5% cut on top of the 2.5% “efficiency dividend”: in other words, a 10% cut to its funding. It will be the same in 2019, and so on.
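The budgeting arithmetic can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `prudent_budget` and the sample grant of 100 (in arbitrary units) are hypothetical, and the two percentages are simply added against the same base, as in the paragraph above. If the 7.5% were instead applied after the efficiency dividend, the combined figure would be slightly below 10%.

```python
def prudent_budget(cgs_grant, efficiency_dividend=0.025, at_risk_share=0.075):
    """Split a CGS grant into the part a university can prudently budget on
    and the part that now depends on meeting performance benchmarks.

    Assumption: both percentages apply to the same original grant amount,
    which is how the article arrives at a combined 10%.
    """
    dividend_cut = cgs_grant * efficiency_dividend   # already announced cut
    at_risk = cgs_grant * at_risk_share              # performance pool share
    guaranteed = cgs_grant - dividend_cut - at_risk  # what can safely be budgeted
    return guaranteed, at_risk


# Hypothetical grant of 100 units, chosen so the result reads as a percentage.
guaranteed, at_risk = prudent_budget(100.0)
print(f"Can budget on: {guaranteed:.1f}; contingent on benchmarks: {at_risk:.1f}")
```

Even a university that meets every benchmark only recovers the contingent share. It cannot plan on that money in advance, which is why the full amount has to be treated as a potential cut.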

A snowball effect

The second consequence is a sort of punitive snowball effect. If a university fails to meet relevant benchmarks, its funding is cut. It has less funding to invest in an effort to remedy the areas that have been found wanting. Indeed, the grand outcome of meeting all the benchmarks is that the university will retain the funding it once counted on to educate its students – it will not be better off.

The third consequence is the cost of reporting to the government annually for this scheme. Any scheme under which all universities are compared for the purposes of distributing revenue will require high levels of precision and comparability. Both come with a significant cost.

None of these consequences is likely to improve the quality of Australian higher education. While the government seems to have accepted the argument that improved funding is necessary to enhance the performance of Australia’s schools, it has adopted the counter-intuitive view that Australian university performance will improve with further funding cuts.

Beyond the likely negative consequences of the shape of this proposed performance funding, what of its purpose? The government seems to believe performance funding will make universities more “accountable”. The indicators that have been mooted include information about admissions criteria, attrition and retention, employability, and student satisfaction.

Lessons from recent history

The last performance funding scheme for learning and teaching, introduced by then-minister Brendan Nelson, demonstrated the perils of attempting to benchmark universities with different missions and student cohorts against a standard set of indicators. In the UK, the Teaching Excellence Framework has produced extensive evidence of the eccentric and unintended outcomes of this type of scheme.

Lest we fail to learn these lessons, here are just some of the measurement and comparability issues that bedevilled Nelson’s performance fund, or will affect this one.

Employability – How do you compare a cohort entering university from school (without full-time employment) with a cohort already in employment and studying part time? How should we measure a university’s impact on employability: by how many students attain employment at the end of their degree? Do you discount those still in the same employment as when they began? What is the university’s responsibility if there is a major recession?

Attrition – How do you compare students who are mature-age or already in employment (a cohort more likely to leave university for a time) with a full-time economically and socially advantaged student cohort (a cohort that typically finishes on time)? Evidence suggests that students usually withdraw from university due to personal and financial circumstances. Comparatively few leave because of a negative experience of university study. Do you discount attrition rates based on the reason for ceasing study? If not, what happens to universities that enrol disadvantaged groups or mature-age students?

Student satisfaction – How do you accurately distinguish levels of student satisfaction between universities when there are bigger variations between fields of study than between universities as a whole? What constitutes an acceptable level of student satisfaction and what is its relationship to learning outcomes?

These examples show that it is difficult to measure education performance in a way that reveals anything meaningful about university performance. It is unfair to compare universities with different mixes of subjects and students. What are the appropriate benchmarks for a university focused on serving a disadvantaged regional community compared to a university with a high proportion of postgraduates studying for global professional employment?

But the overarching question must be: What performance is the government seeking to achieve with this performance funding proposal?

University graduates have higher employment rates than people without a university degree, and Australian graduates are internationally employable. The Australian university system is among the top four in the world in terms of students successfully completing their degrees. That means there is comparatively little real attrition from Australian universities.

Student satisfaction surveys of international students show levels of satisfaction in excess of 85% and student achievement generally at distinction or high distinction levels. In recent weeks Australia was ranked in the top 10 of university systems worldwide by U21, and 4th in the world by QS in 2016.

Running faster, going nowhere

The best possible outcome from this performance funding for a university is that it keeps the 7.5% that it would otherwise have expected. But to achieve that outcome it may have to perform as well as or better than it has before, on less government funding. It will also have to do so while devoting more time and resources to reporting to government about its performance.

Every Australian university is audited annually, providing reports on individual research grants and many other education programs. Universities do so while being expected to fund the maintenance, refurbishment and expansion of their buildings without any direct government grants.

Universities have achieved all this despite having $3.9 billion cut from their funding between 2007 and 2011. The perverse outcome of this success has been a further funding cut of $2.8 billion. For each university, there is now the prospect that some or all of a further 7.5% will be cut to improve performance.

Performance funding must have a reason and a logic. Higher performance does not result from running faster to stay in the same place – and yet that is just what has been proposed.