Supercomputers have enabled breakthroughs in our ability to tackle a huge array of problems, from detailed studies of protein folding, to the dynamics of the Earth’s atmosphere and climate.

Current research in supercomputer architecture is poised to take us to the “exascale”, enabling systems capable of performing $10^{18}$ floating-point operations (or calculations) per second. By way of context, current home computers can perform on the order of billions ($10^{9}$) of floating-point operations per second.

But now, work by my colleagues and me has the potential to beat these exascale systems in a head-to-head match … by 80 orders of magnitude.

(The number 1 is the zeroth order of magnitude, 10 is the first order of magnitude, 100 is the second order of magnitude, and so on.)

Our device, which contains only about 300 atoms, can offer such improvements in performance because it uses quantum physics for computation.

This is a totally new way of processing information that allows enormous parallelism – that is, huge numbers of calculations happening concurrently, to solve a single computational problem.

This translates to the ability to process extraordinarily complicated problems with vanishingly small amounts of hardware.

The system we developed is a quantum simulator – a well-controlled quantum system used to mimic the dynamics of other physical systems, such as interacting electrons in a solid material.

Our system is extraordinarily efficient at solving certain problems. Chief among these is the problem of quantum magnetism – trying to understand how the collective behaviour of exotic materials, such as high-temperature superconductors, arises from quantum mechanical interactions between electrons.

This might sound like the kind of problem only a physicist would care about, and it probably is. But that shouldn’t be the case.

Getting a handle on quantum magnetic interactions between electrons in solids has the potential to revolutionise our understanding of chemistry and biology. It could also give us exceptional new capabilities to engineer designer materials for clean and efficient power distribution or generation.

When it comes to studies of quantum magnetism – or a host of other physics problems – conventional computers run into a wall pretty quickly.

This is because the computational “state-space” that must be represented for interacting quantum particles (that is, the computing space needed to account for all possible combinations or connections) grows exponentially with the number of particles.

So, for interacting two-level systems, such as spin-½ electrons, the state-space grows like $2^{N}$ (where “N” is the number of particles).

So given just 34 interacting spin-½ particles, the state-space reaches roughly 17 gigabytes (that is, $2^{34}$ ≈ 17,000,000,000 bytes) – about the size of the local memory of the most advanced supercomputer processors (note: this is different from the “throughput” of the supercomputer).
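To get a feel for how quickly this wall arrives, here is a minimal Python sketch of the scaling. The function names are illustrative, not part of our experiment. The default of one byte per state matches the counting used above; a real simulation would more likely store a complex amplitude per state (16 bytes at double precision), which is an assumption added here for comparison.

```python
def state_space_size(n_particles: int) -> int:
    """Number of basis states for n interacting two-level (spin-1/2) particles."""
    return 2 ** n_particles


def memory_bytes(n_particles: int, bytes_per_state: int = 1) -> int:
    """Memory needed to hold one value per basis state.

    bytes_per_state=1 reproduces the article's counting; 16 corresponds to
    one double-precision complex amplitude per state (an assumption).
    """
    return state_space_size(n_particles) * bytes_per_state


if __name__ == "__main__":
    for n in (10, 20, 34, 40, 50):
        one_byte = memory_bytes(n)
        complex_amp = memory_bytes(n, bytes_per_state=16)
        print(f"N = {n:3d}: {one_byte:.3e} bytes (1 byte/state), "
              f"{complex_amp:.3e} bytes (16 bytes/state)")
```

Each extra particle doubles the requirement, so going from 34 to 40 particles multiplies the memory by 64.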

For realistic calculations involving large numbers of interacting quantum systems (hundreds of particles, if not more), supercomputers just choke.

Thankfully, there’s another way.

Just as one may build a scale model to study complicated systems, we can build a scale model of certain problems in the quantum regime. The benefit here is that the information capacity of our system scales exactly like the systems we’re interested in studying. There’s no computational wall.

But there has been a practical one.

There have been a variety of beautiful demonstrations of quantum simulation using systems such as trapped ions and photons in the past few years.

They have revealed the computational power of this approach, but have always been well below the “threshold” of 30-40 interacting quantum systems needed for the quantum system to even hope to beat a classical computer (such as the one sitting on your desk or powering your smartphone).

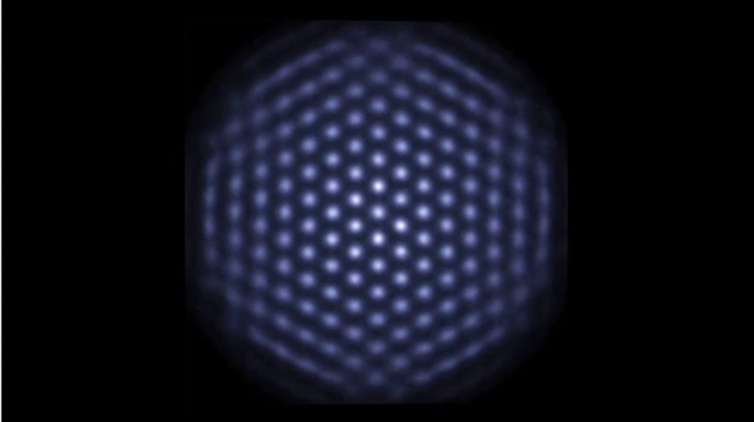

Our experiment reached more than 300 interacting quantum particles, exceeding previous related experiments by more than an order of magnitude. We’ve achieved this by relying on a totally new approach, using a naturally formed two-dimensional crystal of beryllium ions in an electromagnetic trap.

Nature gives us the underlying order that makes this system so interesting – a triangular lattice showing magnetic frustration.
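Frustration is easiest to see on a single triangle. The sketch below is a classical toy model, not our quantum experiment: it assumes three Ising spins (each +1 or -1) with an antiferromagnetic coupling `J > 0` on every bond, so each pair of neighbours would prefer to point in opposite directions. Listing all eight configurations shows that no arrangement can satisfy all three bonds at once.

```python
from itertools import product

# Hypothetical toy model: three classical Ising spins on one triangle.
BONDS = [(0, 1), (1, 2), (0, 2)]
J = 1.0  # J > 0 means neighbours prefer to anti-align (antiferromagnetic)


def energy(spins):
    """Ising energy E = J * sum of s_i * s_j over the three bonds."""
    return J * sum(spins[i] * spins[j] for i, j in BONDS)


def unsatisfied_bonds(spins):
    """Count bonds whose two spins are aligned, against their preference."""
    return sum(1 for i, j in BONDS if spins[i] == spins[j])


configs = list(product((+1, -1), repeat=3))
ground_energy = min(energy(s) for s in configs)
ground_states = [s for s in configs if energy(s) == ground_energy]

if __name__ == "__main__":
    for s in configs:
        print(s, "energy:", energy(s), "unsatisfied bonds:", unsatisfied_bonds(s))
    print("lowest energy:", ground_energy)
    print("number of lowest-energy configurations:", len(ground_states))
```

Every lowest-energy configuration still leaves one bond unsatisfied, and six different configurations tie for lowest energy. That built-in competition is what makes a triangular lattice such a rich setting.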

But we engineer the interactions between these particles and tune them to simulate a wide range of problems. Effectively, we can program in different kinds of interactions and study what comes out, thus “solving” the problem.

The computational potential of this system is enormous. At 333 particles the computational space of interest exceeds one googol – that’s $10^{100}$ or, written out:

10,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000,000.

Representing this space in a classical computer would require the system’s size to exceed that of the known universe. Our system can do so in about a square millimetre.
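The 333 figure can be checked with a few lines of Python, which handles arbitrarily large integers exactly. This is a sketch of the arithmetic only; the helper name is illustrative.

```python
GOOGOL = 10 ** 100


def smallest_n_exceeding(target: int) -> int:
    """Smallest particle number N whose state-space 2**N exceeds target."""
    n = 0
    while 2 ** n <= target:
        n += 1
    return n


if __name__ == "__main__":
    n = smallest_n_exceeding(GOOGOL)
    print("smallest N with 2**N > one googol:", n)
    print("2**332 exceeds a googol?", 2 ** 332 > GOOGOL)
    print("2**333 exceeds a googol?", 2 ** 333 > GOOGOL)
```

So 333 is exactly the particle number at which the state-space first passes one googol.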

Of course, we haven’t yet demonstrated the full capacity of this system. We’ve only taken the tiniest baby-steps, performing “benchmarking” experiments to test the system’s performance for very simple problems.

Showing that it can actually perform calculations that classical machines can’t will take quite a lot of future work.

Nonetheless, we’re very excited about the potential for this and related devices in research labs all over the world. Our collaborative team – at the US National Institute of Standards and Technology, Georgetown University in the US, the Council for Scientific and Industrial Research in South Africa, and The University of Sydney’s School of Physics and Centre for Engineered Quantum Systems in Australia – represents a small part of the worldwide effort to build new useful quantum technology.

We’re hopeful that in the coming decades we’ll see yet another information revolution – this time in the quantum regime.