The technological revolution has hit the doll aisle this holiday season in the form of artificial intelligence dolls. The dolls blend a physical toy with either a mobile device and app, or technological sensors, to simulate signs of intelligence.

As an education scholar who conducts research on popular toys, I find that these dolls make me pause to think about the direction of human progress. What does it mean for children’s development to confuse real bodies with machines?

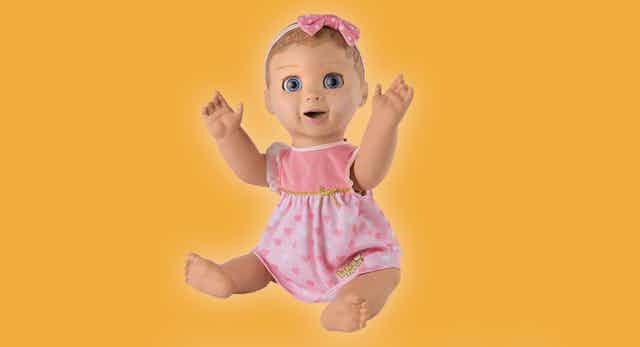

Take Luvabella. She’s supposedly one of this holiday season’s “must-have” toys. Luvabella looks at you with an astonishingly life-like expressive face, her lips and cheeks moving animatronically, her sizeable eyes blinking. She responds to your voice, laughs when you tickle her toes, plays peek-a-boo, babbles and learns words. As babies go, she’s pretty upbeat and low maintenance.

It may be that Luvabella is a natural progression in the evolution of dolls for children — from dolls that look real, to dolls that open and close their eyes when standing or lying down, to dolls that “drink” from a bottle, “pee” and talk.

She may also be a natural progression of technological developments: from robotic vacuum cleaners, self-driving vehicles and automated weapons systems to autonomous dolls.

But when children are involved, questions about their safety and well-being may come to the fore. Is Luvabella problematic, even dangerous?

Children’s emotional attachment

It’s not the toy’s potential to be hacked that is the problem. “My Friend Cayla” and “Hello Barbie” were famously pulled from the shelves because children’s locations and conversations with these dolls were recorded, tracked and transmitted online.

Luvabella isn’t connected to the internet so she isn’t a privacy risk.

It’s not the impact of these tech toys on children’s imaginations either. Early childhood educators may worry that tech-enhanced toys will stifle children’s creativity, but I like to believe that children’s imaginative powers are pretty robust, and that an AI doll won’t mar their ability to pretend.

I’m comfortable — and familiar — with seeing a child derive companionship from a cuddly toy. But a robot doll is a new concept.

Children develop emotional connections with their stuffed animals and turn to them for comfort in moments of anxiety. The English paediatrician and psychoanalyst Donald Winnicott described the importance of a child’s emotional attachment to his or her “transitional object” as a means of lessening the stress involved in separating from a parent.

Children project special qualities of warmth and comfort onto their teddy bears, dolls and cuddly toys. They imbue their action figures with vitality and make voices and movements for them.

Acting as if a toy or soothing object is real, like Calvin with his toy tiger Hobbes, is what most of us would deem a healthy part of growing up: what childhood is supposed to be. It’s a form of childhood magic that makes us adults feel good. A robot doll does not.

Uncanny deception

With a regular doll, the child is the active agent in the relationship. Whatever the toy may give in return is the child’s invention. We adults know that the teddy bear or cuddly doll is not alive. At a certain level so does the child.

Children learn to distinguish between what is real and what is not around the age of three. This ability helps them engage in developmentally rich imaginative play. But this ability is not without moments of confusion.

When a robot doll responds, there may be uncertainty for the child (and at times the adult) about whether the doll is a living creature or not. This experience of being confused between human and automaton is how German psychiatrist Ernst Jentsch explained the term “uncanny” in 1906.

The deception is unnerving. Maybe, as MIT technology professor Sherry Turkle worries, we’re socializing children to consider objects to be comparable substitutes for human interaction. It’s the same reason I’m nervous about the idea of AI caregivers for elders.

Should objects mimic empathy?

To be sure, some forms of robotic assistive technology are downright interesting — clothing that increases the strength of its wearer, robots that help a person move from bed to wheelchair.

But expecting a robot to provide companionship or care, or to somehow help a person feel less alone (see “Care-O-bot”), is cause for concern. It raises questions about what robot ethicists term “presence.”

Should an object mimic sentient presence, comfort, even empathy?

Indeed, Luvabella’s features are similar to those of Paro, a robot seal programmed to provide isolated elders with companionship. This small automated animal simulates pet therapy by responding to voice direction and to being stroked.

Uncharted territory

Toy companies are pressured to make artificial intelligence toys in order to keep up with societal and technological trends, and to continue to yield profit in a highly competitive business. It isn’t their job to consider the human implications.

While the technology isn’t quite there — at least not with Luvabella — to fully replace human contact, the direction of technological development is clear: Artificial intelligence is generating more and more convincing simulated human presence.

I have to ask: Is this where we want to go with our technological achievements? To develop convincing animatronic dolls to give to four-year-olds to befriend?

If so, stay tuned. We are stepping into uncharted territory.