We know buying clothes and accessories online carries a certain level of risk - what if the delivered product doesn’t fit or looks ridiculous?

But thanks to research into augmented reality, you could soon find yourself “trying on” your potential purchases in a “virtual changeroom”.

For some years, shoppers have been able to see photos and videos of shops’ inventory on the internet, helping them decide what to buy.

While pictures can tell a thousand words, they don’t tell us the whole story. For some items, it is not the absolute characteristics of the product that are important - such as “do I like that lipstick colour?” - but its relative characteristics: “do I like that lipstick on my lips?”.

This affects customers’ buying decisions and overall satisfaction with the product.

Enter augmented reality, which allows a person to view a virtual item in the context of the real physical world.

Augmented reality and computer vision

The concept of augmented reality centres around augmenting or supplementing an actual image in real-time with a virtual object or effect, such as artificially adding a certain shade of lipstick to a person’s lips, or placing a virtual couch in their living room.
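To make the lipstick example concrete, here is a minimal sketch of how a virtual colour might be blended into a photo. It assumes we already know which pixels belong to the lips (the hypothetical `lip_mask` below), which is exactly the hard part discussed in the rest of this article. The function names, colour values and the 50% blend strength are illustrative assumptions, not a description of any particular product.

```python
def blend_pixel(base, tint, alpha):
    """Mix one RGB pixel with a tint colour.

    alpha=0 keeps the original pixel, alpha=1 replaces it with the tint.
    """
    return tuple(round((1 - alpha) * b + alpha * t) for b, t in zip(base, tint))


def apply_tint(image, mask, tint, alpha=0.5):
    """Return a copy of `image` with `tint` blended in wherever `mask` is True."""
    return [
        [blend_pixel(px, tint, alpha) if m else px for px, m in zip(row, mask_row)]
        for row, mask_row in zip(image, mask)
    ]


# A tiny 2x2 "photo" of skin-toned pixels (made-up values).
photo = [
    [(200, 150, 140), (200, 150, 140)],
    [(200, 150, 140), (200, 150, 140)],
]

# Hypothetical mask: only the bottom row is "lips".
lip_mask = [
    [False, False],
    [True, True],
]

lipstick = (180, 20, 60)  # an illustrative shade of red
result = apply_tint(photo, lip_mask, lipstick)
```

Only the masked pixels change; everything else in the photo is left untouched, which is what makes the virtual item appear to sit within the real scene.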

Machines, however, do not see in the same way as humans and animals.

To a machine a digital image is simply a vast array of numbers, which computer scientists refer to as pixels. Pixels only record the relative intensity of light and do not explicitly record anything about the type of object the light ray bounced off, or its geometric placement.
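As a rough illustration, the sketch below shows what a very small greyscale image looks like from the machine’s side. The values are made up for this example; the point is that nothing in the grid says “face” or “lips”, only how bright each location is.

```python
# A tiny 3x4 greyscale image: each number is a light intensity
# (0 = black, 255 = white). The values are invented for illustration.
image = [
    [12, 15, 200, 210],
    [10, 180, 220, 205],
    [9, 11, 190, 215],
]

height, width = len(image), len(image[0])

# Simple statistics are easy to compute from the numbers...
total_intensity = sum(sum(row) for row in image)
brightest = max(max(row) for row in image)
mean_intensity = total_intensity / (height * width)

# ...but nothing here records which object each pixel belongs to,
# or where that object sits in 3D space.
```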

Fortunately for us, the human visual system is able to take these pixel measurements of light and rapidly infer such information. In fact, the human visual system is able to do this with such ease that people often mistakenly assume that this task should also be easy for machines.

The challenge of instilling machines with such an ability is known in computer science circles as computer vision. The inability of machines to “see” the way humans do is the fundamental barrier preventing the wider deployment of augmented reality in online retail and beyond.

Semantic 3D from 2D

Over the past few years, computer vision algorithms have become increasingly proficient at detecting objects of interest (such as faces, pedestrians and vehicles). However, knowing which pixel in an image belongs to what object class is not enough if we want to virtually try on a product.

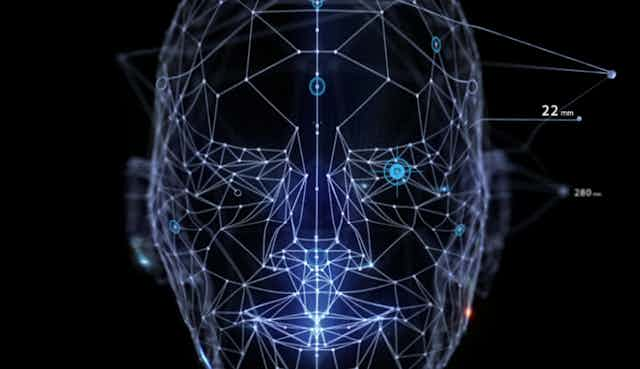

For such an application one also needs to know what part of the object a pixel belongs to (such as eyes, mouth, nose) and an estimate of the 3D position of the camera that captured the image. This detailed information is essential if one is to create a reasonable facsimile of how a virtual accessory or product would appear and move in the real world.
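To see why the camera position matters, consider the textbook pinhole camera model, sketched below. Given a 3D point on a virtual object and an assumed camera pose, it works out which pixel that point should be drawn at. This is a simplified illustration rather than our group’s method: the function name, the single head-turn angle (yaw), the focal length and the image centre are all assumptions chosen for the example.

```python
import math


def project_point(point, yaw_deg, translation, focal_px, centre):
    """Project a 3D point into pixel coordinates with a pinhole camera model.

    point:       (x, y, z) in the object's own coordinate frame
    yaw_deg:     rotation of the object about the vertical (y) axis, in degrees
    translation: (tx, ty, tz) offset of the object from the camera
    focal_px:    focal length expressed in pixels
    centre:      (cx, cy) pixel coordinates of the image centre
    """
    x, y, z = point
    a = math.radians(yaw_deg)

    # Rotate about the vertical axis (a head turning left or right).
    xr = math.cos(a) * x + math.sin(a) * z
    zr = -math.sin(a) * x + math.cos(a) * z

    # Move the point into the camera's coordinate frame.
    xc = xr + translation[0]
    yc = y + translation[1]
    zc = zr + translation[2]
    if zc <= 0:
        raise ValueError("point is behind the camera")

    # Perspective division: farther points land closer to the image centre.
    u = focal_px * xc / zc + centre[0]
    v = focal_px * yc / zc + centre[1]
    return u, v


# A point 50 units to the side of the object's origin, with the object
# 500 units in front of the camera and facing it directly.
u, v = project_point((50, 0, 0), 0, (0, 0, 500), 800, (320, 240))
```

If the estimated pose is wrong, every projected point lands on the wrong pixel, and a virtual pair of glasses would appear to slide around the face as the head moves.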

The team of scientists and graduate students I lead at the CSIRO has been working on meeting this computer vision challenge. In particular, we have been pioneering technology that can accurately locate landmarks on the face in 3D from just a sequence of 2D digital images.

Our recent research has taken advantage of the rapid increase in computation power on mobile smart devices along with the advancement of new machine learning methods for learning intrinsic redundancies for objects of interest (such as faces) from massive image datasets.

A recent advancement of particular note is our group’s ability to accurately estimate tens of thousands of points in 3D on a subject’s face using just a sequence of 2D digital images of the subject turning his or her head.

Previously, you would require special hardware (such as a depth sensor) to obtain this type of quality 3D scan, but with our new approach we can obtain similar quality scans using standard 2D cameras found on smartphones, tablets and laptops.

With this type of detailed 3D information we can now place a bevy of virtual objects suitable for retail - such as glasses, hats and makeup - on the face with remarkable realism.

This realism stems from the accuracy and density of the 3D information we are obtaining from the sequence of 2D digital images captured by the smart device.

Our technology is disruptive in the sense that it now gives consumers the ability to try on merchandise and products online in a way not possible before without specialised hardware.

The future

The commercial world is now waking up to the numerous possibilities for this technology.

Just this year a number of companies have started releasing “virtual try on” apps for facial accessories such as eyeglasses and makeup, needing nothing more than a smartphone or tablet.

CSIRO’s computer vision group has been working with these industry partners over the past two years, pioneering the development of real-time 3D facial landmark tracking software requiring only 2D video from any smart device or laptop.

Faces, however, are just the beginning of this story.

One can imagine a not-too-distant future where a person shopping for a sofa could walk into their living room and, using their smart device, virtually place a sofa anywhere in the room, viewing it in different colours, styles and positions. With a tap of the device, they could buy the sofa, knowing whether it will fit in the room and match the rest of the furniture.

Advances in computer vision technologies will soon have the potential to not only tell us a thousand words through an image - but perhaps the whole novel as well.