I have just attended the 15th World Congress of Pain Clinicians, and while there, I went to a session chaired by one of the unsung heroes of evidence-based medicine, Prof Andrew Moore from Oxford University. As somebody who has to work continuously to avoid forgetting everything I know about statistics, I love hearing talks by people who make it all seem very clear.

Prof Moore is part of a group based in Oxford who have been instrumental in improving the quality of research in pain medicine. The philosophy of this group is to work to ensure that all patients who participate in clinical trials have their altruism honoured by ensuring that their data is collected in such a way that it can continue to be analysed years after the study is completed. If the data is collected in a consistent way by all studies in a particular field then the results can be pooled and analysed as if it was all one enormous study.

This is important for a number of reasons, not least because enormous studies are incredibly expensive and hard to organise. An equally important but less obvious reason is because in small studies involving, say 40-60 participants, statistical analysis struggles to overcome the effect of random events. The end result is almost always that small trials of individual drugs versus placebo tend to vastly overestimate the positive effect of the drug in the trial. Prof Moore’s classic paper explaining this dilemma can be found here.

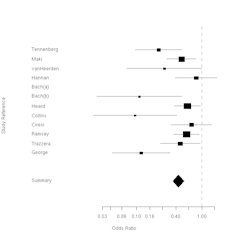

The Oxford group has recently produced an outstanding summary of all the evidence related to drugs used to treat acute pain. Unfortunately, paywall issues mean that I can’t link directly to the paper. I can however link to the reference page which you can find here. They reviewed over 350 individual studies involving more than 45,000 patients over a 50 year period. The end result was a very similar ‘league table’ to this one which is some of their earlier work.

Seeing this sort of work gives some idea why science-based health professionals are hard to impress. A single study of a treatment, even if flawlessly conducted, is not enough evidence to be convincing. The effect of the treatment needs to be huge if even a medium-sized study is to show an absolutely clear effect. As if this is not hard enough to achieve, if the same treatment is studied using different methodology for the trial the results may be wildly divergent. Consistent use of the smartest study designs means that there will be fewer avoidable aberrant results reported. The signal will be coaxed from the noise more quickly.

It can be a bitter experience to eventually realise that the ‘promising treatment’ you’ve learnt how to use was actually less effective than the old faithful stuff. It can even be very expensive and embarrassing if your hospital has shelled out a big pile of clams to fund equipment and training. A venerable adage among surgeons is that you don’t want to be the first one doing a particular procedure, or the last. But in the end, a systematic review of carefully collected and curated evidence remains the best way to decide whether treatments work, and we take them very seriously.

Such changes of evidence-based opinion occur all the time in medicine, and properly designing studies helps to weed out ineffective treatments at the earliest possible opportunity once they have entered practice. More subtly, having lots of data points means that patterns can emerge which helps you target a treatment to the people who will benefit the most, and avoid foisting it onto those who cannot benefit from it.

Statistics is hard because our brains are easy to fool. The answers may be surprising or feel wrong. This doesn’t excuse us from having to know how it works. Unlike politics or the law, medical opinion does not rely just on logic and debate, but on a consensus derived from some complicated figuring out. Once settled, it requires that you not only show we are wrong, but explain how we went wrong in the face of a mountain of well-collected data and accurate interpretations of reality.