Articles on Artificial Intelligence ethics

Current laws governing policing don’t take into account the capacity of AI to process massive amounts of information quickly – leaving New Zealanders vulnerable to police overreach.

What AI-narrated audiobooks tell us about reading and big tech.

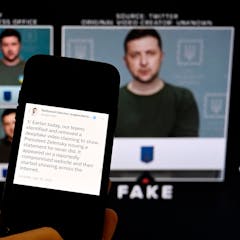

AI systems with deceptive capabilities could be misused in numerous ways by bad actors. Or, they may become prone to behaving in ways their creators never intended.

The age of autonomous weapons is upon us.

Snapchat’s AI-powered chatbot malfunctioned this week, raising questions of “sentience” among users. As AI becomes increasingly human-like, society must become AI-literate.

Creating bias-free AI systems is easier said than done. A computer scientist explains how controlling bias could lead to fairer AI.

In a preprint study, researchers estimate training the model behind ChatGPT would have required somewhere between 210,000 and 700,000 litres of water.

New AI exhibit asks visitors whether they would trust an AI robot to look after their pets.

Is it possible to ‘align’ AI systems to human interest? A new plan to build an AI system to solve this problem highlights the limits of the idea.

Regardless of the input, AI image generators will have a tendency to return certain kinds of results. This is where the potential for bias arises.

Strengthening democratic values in the face of AI will require coordinated international efforts between industry, government and non-governmental organizations.

Companies that want to avoid the harms of AI, such as bias or privacy violations, lack clear-cut guidelines on how to act responsibly. That makes internal management and decision-making critical.

Society as a whole has an incentive to regulate AI development. For individual companies, though, the opposite is true.

Transparency and accountability must be a priority to prevent discrimination.

Computer scientists are overwhelmingly present in AI news coverage in Canada, while critical voices who could speak to the current and potential adverse effects of AI are lacking.

Generative AI is designed to produce the unforeseen, but that doesn’t mean developers can’t predict the types of social consequences it may cause.

Pausing AI development will give our governments and culture time to catch up with and steer the rush of new technology.

Powerful new AI systems could amplify fraud and misinformation, leading to widespread calls for government regulation. But regulating AI is easier said than done and could have unintended consequences.

When OpenAI claims to be “developing technologies that empower everyone,” who is included in the term “everyone”? And in what context will this “power” be wielded?

Experts largely agree that AI with human-level capabilities is not that far off. How will this change our relationship with machines?